What is a feature store?

This page explains what a feature store is and what benefits it provides, and the specific advantages of Databricks Feature Store.

A feature store is a centralized repository that enables data scientists to find and share features and also ensures that the same code used to compute the feature values is used for model training and inference.

Machine learning uses existing data to build a model to predict future outcomes. In almost all cases, the raw data requires preprocessing and transformation before it can be used to build a model. This process is called feature engineering, and the outputs of this process are called features - the building blocks of the model.

Developing features is complex and time-consuming. An additional complication is that for machine learning, feature calculations need to be done for model training, and then again when the model is used to make predictions. These implementations may not be done by the same team or using the same code environment, which can lead to delays and errors. Also, different teams in an organization will often have similar feature needs but may not be aware of work that other teams have done. A feature store is designed to address these problems.

Why use Databricks Feature Store?

Databricks Feature Store is fully integrated with other components of Databricks.

Discoverability. The Feature Store UI, accessible from the Databricks workspace, lets you browse and search for existing features.

Lineage. When you create a feature table in Databricks, the data sources used to create the feature table are saved and accessible. For each feature in a feature table, you can also access the models, notebooks, jobs, and endpoints that use the feature.

Integration with model scoring and serving. When you use features from Feature Store to train a model, the model is packaged with feature metadata. When you use the model for batch scoring or online inference, it automatically retrieves features from Feature Store. The caller does not need to know about them or include logic to look up or join features to score new data. This makes model deployment and updates much easier.

Point-in-time lookups. Feature Store supports time series and event-based use cases that require point-in-time correctness.

Feature Engineering in Unity Catalog

With Databricks Runtime 13.3 LTS and above, if your workspace is enabled for Unity Catalog, Unity Catalog becomes your feature store. You can use any Delta table or Delta Live Table in Unity Catalog with a primary key as a feature table for model training or inference. Unity Catalog provides feature discovery, governance, lineage, and cross-workspace access.

How does Databricks Feature Store work?

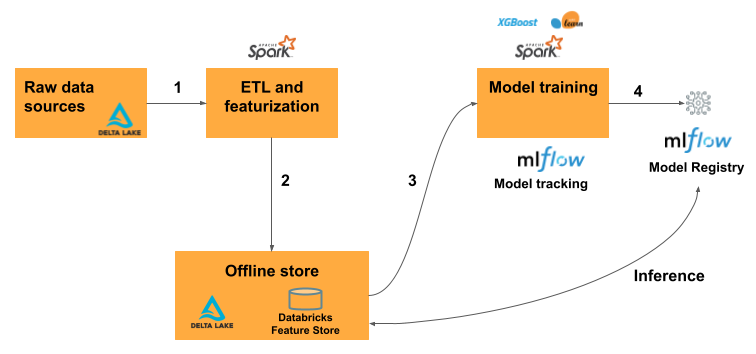

The typical machine learning workflow using Feature Store follows this path:

Write code to convert raw data into features and create a Spark DataFrame containing the desired features.

For workspaces that are enabled for Unity Catalog, write the DataFrame as a feature table in Unity Catalog. If your workspace is not enabled for Unity Catalog, write the DataFrame as a feature table in the Workspace Feature Store.

Train a model using features from the feature store. When you do this, the model stores the specifications of features used for training. When the model is used for inference, it automatically joins features from the appropriate feature tables.

Register model in Model Registry.

You can now use the model to make predictions on new data. The model automatically retrieves the features it needs from Feature Store.

Start using Feature Store

See the following articles to get started with Feature Store:

Try one of the example notebooks that illustrate feature store capabilities.

See the reference material for the Feature Store Python API.

Learn about training models with Feature Store.

Learn about Feature Engineering in Unity Catalog.

Learn about the Workspace Feature Store.

Use time series feature tables and point-in-time lookups to retrieve the latest feature values as of a particular time for training or scoring a model.

When you use Feature Engineering in Unity Catalog, you use Unity Catalog privileges to control access the feature tables. The following link is for the Workspace Feature Store only:

Supported data types

Feature Engineering in Unity Catalog and Workspace Feature Store support the following PySpark data types:

IntegerTypeFloatTypeBooleanTypeStringTypeDoubleTypeLongTypeTimestampTypeDateTypeShortTypeArrayTypeBinaryType[1]DecimalType[1]MapType[1]

[1] BinaryType, DecimalType, and MapType are supported in all versions of Feature Engineering in Unity Catalog and in Workspace Feature Store v0.3.5 or above.

The data types listed above support feature types that are common in machine learning applications. For example:

You can store dense vectors, tensors, and embeddings as

ArrayType.You can store sparse vectors, tensors, and embeddings as

MapType.You can store text as

StringType.

When published to online stores, ArrayType and MapType features are stored in JSON format.

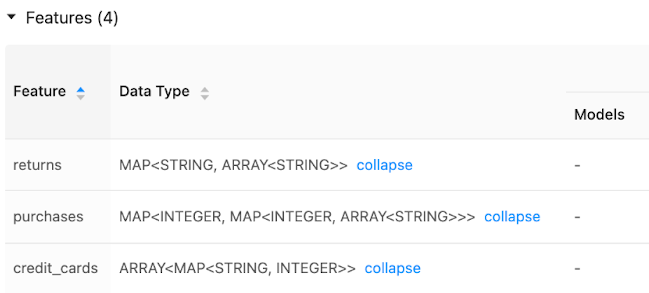

The Feature Store UI displays metadata on feature data types:

More information

For more information on best practices for using Feature Store, download The Comprehensive Guide to Feature Stores.