Configure settings for Databricks jobs

This article provides details on configuring Databricks Jobs and individual job tasks in the Jobs UI. To learn about using the Databricks CLI to edit job settings, run the CLI command databricks jobs update -h. To learn about using the Jobs API, see the Jobs API.

Some configuration options are available on the job, and other options are available on individual tasks. For example, the maximum concurrent runs can be set only on the job, while retry policies are defined for each task.

Edit a job

To change the configuration for a job:

Click Workflows in the sidebar.

In the Name column, click the job name.

The side panel displays the Job details. You can change the job's trigger, compute configuration, notifications, and maximum number of concurrent runs, configure duration thresholds, and add or change tags. If job access control is enabled, you can also edit job permissions.

Add parameters for all job tasks

You can configure parameters on a job that are passed to any of the job’s tasks that accept key-value parameters, including Python wheel files configured to accept keyword arguments. Parameters set at the job level are added to configured task-level parameters. Job parameters passed to tasks are visible in the task configuration, along with any parameters configured on the task.

You can also pass job parameters to tasks that are not configured with key-value parameters such as JAR or Spark Submit tasks. To pass job parameters to these tasks, format arguments as {{job.parameters.[name]}}, replacing [name] with the key that identifies the parameter.
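As an illustration of the reference format, the following sketch builds an argument list for a JAR-style task and mimics the substitution locally. The parameter names run_date and env and the function render_arguments are hypothetical. Databricks resolves these references itself when the task runs, so this code only shows what the formatted arguments look like before and after resolution.

```python
import re

# Matches references of the form {{job.parameters.<name>}}.
_JOB_PARAM_REF = re.compile(r"\{\{job\.parameters\.([A-Za-z0-9_\-]+)\}\}")


def render_arguments(arguments, job_parameters):
    """Replace each {{job.parameters.<name>}} reference with its value.

    Local illustration only (hypothetical helper). Unknown names are
    left untouched so that a missing parameter stays visible.
    """
    def substitute(match):
        name = match.group(1)
        return str(job_parameters.get(name, match.group(0)))

    return [_JOB_PARAM_REF.sub(substitute, arg) for arg in arguments]


# Hypothetical arguments for a task that takes positional arguments.
jar_arguments = ["--date", "{{job.parameters.run_date}}", "--env={{job.parameters.env}}"]
print(render_arguments(jar_arguments, {"run_date": "2024-04-15", "env": "dev"}))
# ['--date', '2024-04-15', '--env=dev']
```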

Job parameters take precedence over task parameters. If a job parameter and a task parameter have the same key, the job parameter overrides the task parameter.

You can override configured job parameters or add new job parameters when you run a job with different parameters or repair a job run.
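The precedence rules above can be sketched as a layered merge. This is a minimal model, not the Databricks implementation: the function name effective_parameters and the example keys are hypothetical, and the sketch assumes all parameters are simple key-value pairs.

```python
def effective_parameters(task_parameters, job_parameters, run_overrides=None):
    """Model which value a task sees for each key.

    Layers, from lowest to highest precedence:
      1. parameters configured on the task
      2. parameters configured on the job (override the task on equal keys)
      3. values supplied when running or repairing the job
         (override or extend the configured job parameters)
    """
    merged = dict(task_parameters)
    merged.update(job_parameters)
    merged.update(run_overrides or {})
    return merged


task_parameters = {"env": "dev", "table": "sales"}
job_parameters = {"env": "prod", "run_date": "2024-04-15"}

print(effective_parameters(task_parameters, job_parameters))
# {'env': 'prod', 'table': 'sales', 'run_date': '2024-04-15'}

print(effective_parameters(task_parameters, job_parameters, {"run_date": "2024-04-16"}))
# {'env': 'prod', 'table': 'sales', 'run_date': '2024-04-16'}
```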

You can also share context about jobs and tasks using a set of dynamic value references.

To add job parameters, click Edit parameters in the Job details side panel and specify the key and default value of each parameter. To view a list of available dynamic value references, click Browse dynamic values.

Add tags to a job

To add labels or key:value attributes to your job, you can add tags when you edit the job. You can use tags to filter jobs in the Jobs list; for example, you can use a department tag to filter all jobs that belong to a specific department.

Note

Because job tags are not designed to store sensitive information such as personally identifiable information or passwords, Databricks recommends using tags for non-sensitive values only.

Tags also propagate to job clusters created when a job is run, allowing you to use tags with your existing cluster monitoring.

To add or edit tags, click + Tag in the Job details side panel. You can add the tag as a key and value or a label. To add a label, enter the label in the Key field and leave the Value field empty.

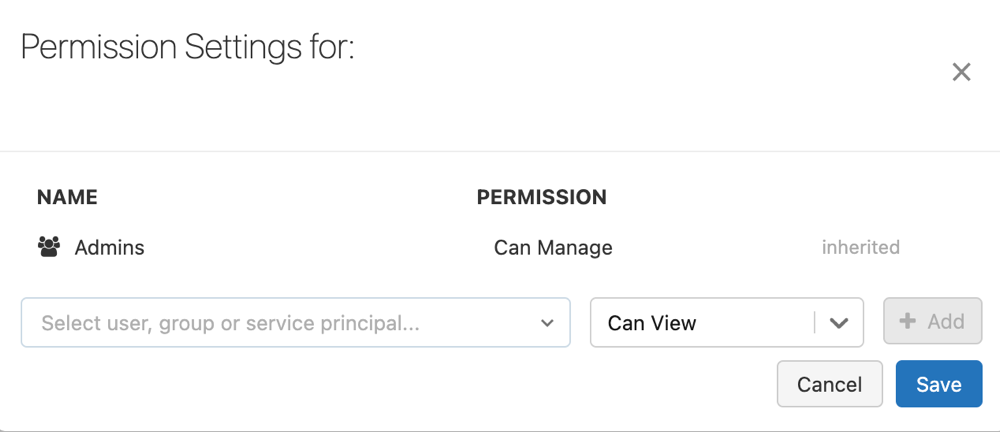
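The difference between key:value tags and labels, and the way tags can be used for filtering, can be sketched as follows. The helpers build_tags and jobs_with_tag and the example job names are hypothetical. The sketch assumes a label is stored as a key with an empty value, mirroring how you enter it in the UI.

```python
def build_tags(key_values=None, labels=()):
    """Combine key:value tags and labels into a single mapping.

    A label is modeled as a key whose value is the empty string.
    Keep values non-sensitive: no passwords or personal data.
    """
    tags = dict(key_values or {})
    for label in labels:
        tags[label] = ""
    return tags


def jobs_with_tag(jobs, key, value=None):
    """Return names of jobs carrying the tag; value=None matches any value."""
    return [
        job["name"]
        for job in jobs
        if key in job["tags"] and (value is None or job["tags"][key] == value)
    ]


jobs = [
    {"name": "daily_sales", "tags": build_tags({"department": "finance"}, ["nightly"])},
    {"name": "clickstream", "tags": build_tags({"department": "marketing"})},
]
print(jobs_with_tag(jobs, "department", "finance"))  # ['daily_sales']
print(jobs_with_tag(jobs, "nightly"))                # ['daily_sales']
```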

Control access to a job

Job access control enables job owners and administrators to grant fine-grained permissions on their jobs. Job owners can choose which other users or groups can view the job results. Owners can also choose who can manage their job runs (Run now and Cancel run permissions).

For information on job permission levels, see Job ACLs.

You must have CAN MANAGE or IS OWNER permission on the job in order to manage permissions on it.

In the sidebar, click Job Runs.

Click the name of a job.

In the Job details panel, click Edit permissions.

In Permission Settings, click the Select User, Group or Service Principal… drop-down menu and select a user, group, or service principal.

Click Add.

Click Save.

Manage the job owner

By default, the creator of a job has the IS OWNER permission and is the user in the job’s Run as setting. Jobs run as the identity of the user in the Run as setting. For more information on the Run as setting, see Run a job as a service principal.

Workspace admins can change the job owner to themselves. When ownership is transferred, the previous owner is granted the CAN MANAGE permission.

Note

When the RestrictWorkspaceAdmins setting on a workspace is set to ALLOW ALL, workspace admins can change a job owner to any user or service principal in their workspace. To restrict workspace admins to only change a job owner to themselves, see Restrict workspace admins.

Configure maximum concurrent runs

Click Edit concurrent runs under Advanced settings to set the maximum number of parallel runs for this job. If the job has already reached its maximum number of active runs when a new run is triggered, Databricks skips the new run. Set this value higher than the default of 1 to perform multiple runs of the same job concurrently. This is useful, for example, if you trigger your job on a frequent schedule and want to allow consecutive runs to overlap, or if you want to trigger multiple runs that differ by their input parameters.

Enable queueing of job runs

To enable runs of a job to be placed in a queue to run later when they cannot run immediately because of concurrency limits, click the Queue toggle under Advanced settings. See What if my job cannot run because of concurrency limits?.

Note

Queueing is enabled by default for jobs that were created through the UI after April 15, 2024.
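The interaction between the maximum concurrent runs setting and queueing can be summarized in a small decision function. This is a simplified model under stated assumptions: the function on_new_run and its return values are hypothetical, and the sketch considers only the job's own concurrency limit, not other limits that might also delay a run.

```python
def on_new_run(active_runs, max_concurrent_runs=1, queue_enabled=False):
    """Decide what happens to a newly triggered run of a job.

    Returns "start" when the job is below its concurrency limit,
    "queue" when the limit is reached and queueing is enabled,
    and "skip" when the limit is reached and queueing is disabled.
    """
    if active_runs < max_concurrent_runs:
        return "start"
    return "queue" if queue_enabled else "skip"


# With the default limit of 1, a second overlapping run is skipped.
print(on_new_run(active_runs=1))                         # skip
# Raising the limit lets consecutive runs overlap.
print(on_new_run(active_runs=1, max_concurrent_runs=3))  # start
# With queueing enabled, the run waits instead of being skipped.
print(on_new_run(active_runs=1, queue_enabled=True))     # queue
```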

Configure an expected completion time or a timeout for a job

You can configure optional duration thresholds for a job, including an expected completion time for the job and a maximum completion time for the job. To configure duration thresholds, click Set duration thresholds.

To configure an expected completion time for the job, enter the expected duration in the Warning field. If the job exceeds this threshold, you can configure notifications for the slow running job. See Configure notifications for slow running or late jobs.

To configure a maximum completion time for a job, enter the maximum duration in the Timeout field. If the job does not complete in this time, Databricks sets its status to “Timed Out” and the job is stopped.
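The two thresholds can be thought of as a classification of the elapsed run time. The following is a minimal sketch, assuming durations are expressed in seconds. The function duration_status and its return values are hypothetical, and the exact handling of a run that finishes precisely at a threshold is an assumption.

```python
def duration_status(elapsed_seconds, warning_seconds=None, timeout_seconds=None):
    """Classify a run by its elapsed time against optional thresholds.

    "timed_out": the run reached the Timeout value without completing.
    "warning":   the run exceeded the Warning value (notifications can fire).
    "ok":        neither threshold applies.
    Boundary behavior (>= versus >) is an assumption of this sketch.
    """
    if timeout_seconds is not None and elapsed_seconds >= timeout_seconds:
        return "timed_out"
    if warning_seconds is not None and elapsed_seconds > warning_seconds:
        return "warning"
    return "ok"


# Warning after 30 minutes, timeout after 1 hour.
print(duration_status(600, warning_seconds=1800, timeout_seconds=3600))   # ok
print(duration_status(2400, warning_seconds=1800, timeout_seconds=3600))  # warning
print(duration_status(3600, warning_seconds=1800, timeout_seconds=3600))  # timed_out
```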

Edit a task

To set task configuration options:

Click Workflows in the sidebar.

In the Name column, click the job name.

Click the Tasks tab and select the task to edit.

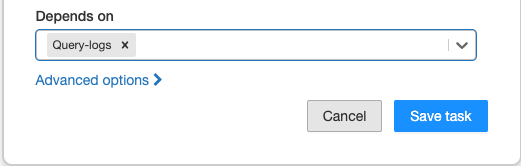

Define task dependencies

You can define the order of execution of tasks in a job using the Depends on drop-down menu. You can set this field to one or more tasks in the job.

Note

Depends on is not visible if the job consists of only one task.

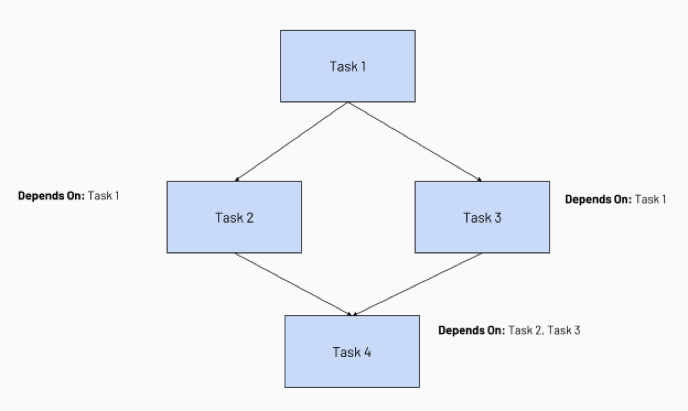

Configuring task dependencies creates a Directed Acyclic Graph (DAG) of task execution, a common way of representing execution order in job schedulers. For example, consider the following job consisting of four tasks:

Task 1 is the root task and does not depend on any other task.

Task 2 and Task 3 depend on Task 1 completing first.

Finally, Task 4 depends on Task 2 and Task 3 completing successfully.

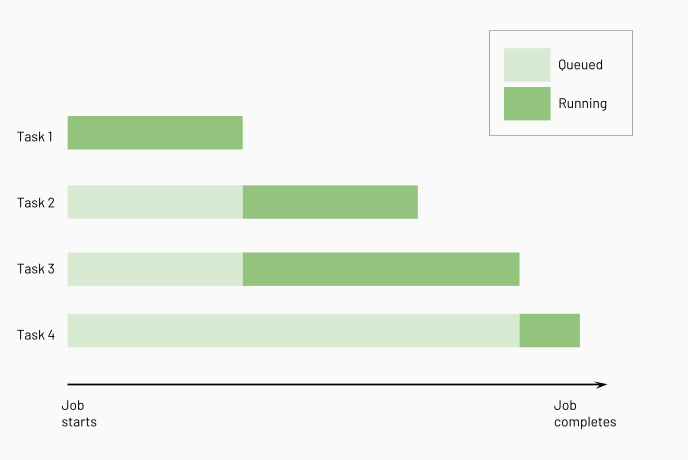

Databricks runs upstream tasks before running downstream tasks, running as many of them in parallel as possible. The following diagram illustrates the order of processing for these tasks:
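The same processing order can be derived in code. The sketch below uses the graphlib module from the Python standard library (Python 3.9 or later) to group the four example tasks into waves, where every task in a wave can run in parallel. The task keys and the function execution_waves are hypothetical, and the sketch only models ordering, not how Databricks schedules tasks internally.

```python
from graphlib import TopologicalSorter


def execution_waves(dependencies):
    """Group tasks into waves that can run in parallel.

    dependencies maps each task to the set of tasks it depends on.
    prepare() raises graphlib.CycleError if the graph is not acyclic.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all tasks whose upstream tasks are done
        waves.append(ready)
        sorter.done(*ready)                 # mark the whole wave as finished
    return waves


# The four-task example: each key depends on the tasks in its set.
dependencies = {
    "task_1": set(),
    "task_2": {"task_1"},
    "task_3": {"task_1"},
    "task_4": {"task_2", "task_3"},
}
print(execution_waves(dependencies))
# [['task_1'], ['task_2', 'task_3'], ['task_4']]
```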

Configure a cluster for a task

To configure the cluster where a task runs, click the Cluster drop-down menu. You can edit a shared job cluster, but you cannot delete a shared cluster if other tasks still use it.

To learn more about selecting and configuring clusters to run tasks, see Use Databricks compute with your jobs.

Configure dependent libraries

Dependent libraries will be installed on the cluster before the task runs. You must set all task dependencies to ensure they are installed before the run starts. Follow the recommendations in Manage library dependencies for specifying dependencies.

Configure an expected completion time or a timeout for a task

You can configure optional duration thresholds for a task, including an expected completion time for the task and a maximum completion time for the task. To configure duration thresholds, click Duration threshold.

To configure the task’s expected completion time, enter the duration in the Warning field. If the task exceeds this threshold, an event is triggered. You can use this event to send a notification when a task is running slowly. See Configure notifications for slow running or late jobs.

To configure a maximum completion time for a task, enter the maximum duration in the Timeout field. If the task does not complete in this time, Databricks sets its status to “Timed Out”.

Configure a retry policy for a task

To configure a policy that determines when and how many times failed task runs are retried, click + Add next to Retries. The retry interval is calculated in milliseconds between the start of the failed run and the subsequent retry run.

Note

If you configure both Timeout and Retries, the timeout applies to each retry.
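To make the interaction between Timeout and Retries concrete, the following sketch simulates a task whose attempts are each checked against the same timeout. The function simulate_task, the status strings, and the example durations are hypothetical. The sketch also assumes that a timed-out attempt is retried like any other failed attempt, which may depend on how the retry policy is configured.

```python
def simulate_task(attempts, max_retries, timeout_seconds):
    """Return the outcome of each attempt of a task.

    attempts: list of (duration_seconds, succeeded) tuples, one per
              attempt, in the order the attempts would run.
    The timeout is applied to every attempt separately, not to the
    total time across attempts. At most 1 + max_retries attempts run.
    """
    outcomes = []
    for duration, succeeded in attempts[: max_retries + 1]:
        if duration > timeout_seconds:
            outcomes.append("TIMED_OUT")  # stopped before it could finish
        elif succeeded:
            outcomes.append("SUCCESS")
            break                         # no further retries needed
        else:
            outcomes.append("FAILED")
    return outcomes


# First attempt runs too long, second fails quickly, third succeeds.
attempts = [(700, True), (120, False), (90, True)]
print(simulate_task(attempts, max_retries=2, timeout_seconds=600))
# ['TIMED_OUT', 'FAILED', 'SUCCESS']
print(simulate_task(attempts, max_retries=1, timeout_seconds=600))
# ['TIMED_OUT', 'FAILED']
```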