Convert a Delta Live Tables pipeline into a Databricks Asset Bundle project

This article shows how to convert an existing Delta Live Tables (DLT) pipeline into a bundle project. Bundles enable you to define and manage your Databricks data processing configuration in a single, source-controlled YAML file that provides easier maintenance and enables automated deployment to target environments.

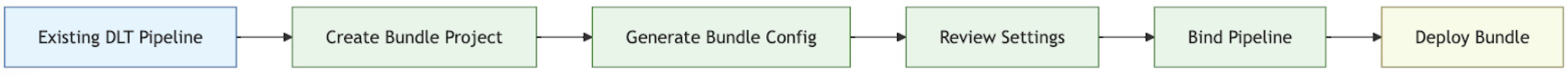

Conversion process overview

The steps you take to convert an existing pipeline to a bundle are:

Make sure you have access to a previously configured pipeline you want to convert to a bundle.

Create or prepare a folder (preferably in a source-controlled hierarchy) to store the bundle.

Generate a configuration for the bundle from the existing pipeline, using the Databricks CLI.

Review the generated bundle configuration to ensure it is complete.

Link the bundle to the original pipeline.

Deploy the pipeline to a target workspace using the bundle config.

Requirements

Before you start, you must have:

The Databricks CLI installed on your local development machine. Databricks CLI version 0.218.0 or above is required to use Databricks Asset Bundles.

The ID of an existing DLT pipeline you will manage with a bundle. To learn how to obtain this ID, see Get an existing pipeline definition using the UI.

Authorization for the Databricks workspace where the existing DLT pipeline runs. To configure authentication and authorization for your Databricks CLI calls, see Authenticate access to Databricks resources.

Step 1: Set up a folder for your bundle project

You must have access to a Git repository that is configured in Databricks as a Git folder. You will create your bundle project in this repository, which will apply source control and make it available to other collaborators through a Git folder in the corresponding Databricks workspace. (For more details on Git folders, see Git integration for Databricks Git folders.)

Go to the root of the cloned Git repository on your local machine.

At an appropriate place in the folder hierarchy, create a folder specifically for your bundle project. For example:

mkdir -p ~/source/my-pipelines/ingestion/events/my-bundle

Change your current working directory to this new folder. For example:

cd ~/source/my-pipelines/ingestion/events/my-bundle

Initialize a new bundle by running the following command and answering the prompts:

databricks bundle init

Once it completes, you will have a project configuration file named databricks.yml in the new home folder for your project. This file is required for deploying your pipeline from the command line. For more details on this configuration file, see Databricks Asset Bundle configuration.
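For orientation, here is a minimal sketch of a databricks.yml file. The file you actually get depends on the template you choose during bundle init, so treat this as an illustration rather than the exact output. The bundle name my-bundle and the resources/*.yml glob are assumptions. The include mapping matters here: it is what lets bundle commands load the pipeline configuration file that bundle generate later writes to the resources folder.

```yaml
# databricks.yml: minimal sketch (my-bundle is a hypothetical name;
# your chosen template may generate additional mappings)
bundle:
  name: my-bundle

# Load every resource configuration file in resources/, including the
# *.pipeline.yml file written by `databricks bundle generate pipeline`.
include:
  - resources/*.yml
```

If your template did not add an include mapping like this one, add it yourself. Otherwise the generated pipeline configuration is not part of the bundle.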

Step 2: Generate the pipeline configuration

From this new directory in your cloned Git repository’s folder tree, run the following Databricks CLI command and provide the ID of your DLT pipeline as <pipeline-id>:

databricks bundle generate pipeline --existing-pipeline-id <pipeline-id> --profile <profile-name>

When you run the generate command, it creates a bundle configuration file for your pipeline in the bundle’s resources folder and downloads any referenced artifacts to the src folder. The --profile flag (or its short form, -p) is optional. If you want to use a specific Databricks configuration profile instead of the default profile, provide it in this command. Profiles are defined in your .databrickscfg file, which is created when you configure authentication for the Databricks CLI. For information about Databricks configuration profiles, see Databricks configuration profiles.
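If you always use the same non-default profile for this project, one option is to record it in databricks.yml instead of passing --profile on every command. The sketch below assumes a profile named my-profile (hypothetical) exists in your .databrickscfg file. Bundle commands run from the project folder then pick up the profile from the workspace mapping.

```yaml
# Fragment of databricks.yml (my-profile is a hypothetical profile name)
workspace:
  # Must match a [my-profile] section in your .databrickscfg file
  profile: my-profile
```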

Step 3: Review the bundle project files

When the bundle generate command completes, it will have created two new folders:

resources is the project subdirectory that contains project configuration files.

src is the project folder where source files, such as queries and notebooks, are stored.

The command also creates some additional files:

*.pipeline.yml under the resources subdirectory. This file contains the specific configuration and settings for your DLT pipeline.

Source files, such as SQL queries, under the src subdirectory, copied from your existing DLT pipeline.

The resulting project structure looks like this:

├── databricks.yml  # Project configuration file created with the bundle init command
├── resources/
│   └── {your-pipeline-name}.pipeline.yml  # Pipeline configuration
└── src/
    └── {SQL-query-retrieved-from-your-existing-pipeline}.sql  # Your pipeline's declarative query
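The generated *.pipeline.yml file defines the pipeline under the resources mapping. The sketch below only illustrates the general shape. The key ingestion_data_pipeline, the catalog and target schema values, and the SQL file name are all hypothetical. The file that bundle generate produces reflects your pipeline's actual settings, and its layout may differ, for example in whether source files are listed as file or notebook libraries.

```yaml
# resources/ingestion_data_pipeline.pipeline.yml: illustrative sketch only
resources:
  pipelines:
    ingestion_data_pipeline:          # hypothetical resource key
      name: ingestion_data_pipeline   # pipeline name shown in the workspace
      catalog: main                   # hypothetical Unity Catalog catalog
      target: ingestion               # hypothetical target schema
      libraries:
        - file:
            # Relative paths resolve from this YAML file, so this points
            # into the bundle's src folder
            path: ../src/events_query.sql
```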

Step 4: Bind the bundle pipeline to your existing pipeline

You must link, or bind, the pipeline definition in the bundle to your existing pipeline. Binding ensures that deploying the bundle updates that pipeline instead of creating a new one. To do this, run the following Databricks CLI command:

databricks bundle deployment bind <pipeline-name> <pipeline-ID> --profile <profile-name>

<pipeline-name> is the key that identifies the pipeline in the bundle configuration. For a generated configuration, this key matches the prefix of the pipeline configuration file name in your new resources directory. For example, if you have a pipeline configuration file named ingestion_data_pipeline.pipeline.yml in your resources folder, provide ingestion_data_pipeline as your pipeline name.

<pipeline-ID> is the ID for your pipeline. It is the same as the one you copied as part of the requirements for these instructions.
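The resource key and the pipeline's display name can differ. In the hypothetical fragment below, the key is ingestion_data_pipeline and the display name is Ingestion data pipeline. The key, not the display name, is the value to pass as <pipeline-name>.

```yaml
# resources/ingestion_data_pipeline.pipeline.yml (hypothetical fragment)
resources:
  pipelines:
    ingestion_data_pipeline:        # <- pass this key to `bundle deployment bind`
      name: Ingestion data pipeline # display name; not used by the bind command
```

With this file, the bind command would be: databricks bundle deployment bind ingestion_data_pipeline <pipeline-ID> --profile <profile-name>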

Step 5: Deploy your pipeline using your new bundle

Now, deploy your pipeline bundle to your target workspace using the bundle deploy Databricks CLI command:

databricks bundle deploy --target <target-name> --profile <profile-name>

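The --target flag selects which target to deploy to. Set it to the name of a target defined in the targets mapping of your databricks.yml file, such as development or production. If you omit it, the CLI uses the target marked as the default in that file.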
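Target names such as development and production come from the targets mapping in databricks.yml. The minimal sketch below uses placeholder workspace URLs. The default: true setting marks the target the CLI falls back to when --target is omitted.

```yaml
# Fragment of databricks.yml; host URLs are placeholders
targets:
  development:
    default: true   # used when --target is not passed
    workspace:
      host: https://<development-workspace-url>
  production:
    workspace:
      host: https://<production-workspace-url>
```

With this configuration, databricks bundle deploy --target production deploys to the production workspace. Running databricks bundle deploy without --target deploys to development.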

If the command succeeds, your DLT pipeline configuration now lives in a source-controlled bundle project. You can deploy that project to other workspaces, run it there, and easily share it with other Databricks users in your account.

Troubleshooting

| Issue | Solution |
|---|---|
| “… | Currently, the … |
| Existing pipeline settings don’t match the values in the generated pipeline YAML configuration | The pipeline ID does not appear in the bundle configuration YML file. If you notice any other missing settings, you can manually apply them. |
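To apply a missing setting manually, add it under the pipeline's key in the *.pipeline.yml file. In the sketch below, the pipeline key and the configuration parameter are hypothetical. The configuration, channel, photon, and continuous fields are standard pipeline settings. Copy the actual values from your existing pipeline's settings in the UI rather than from this example.

```yaml
resources:
  pipelines:
    ingestion_data_pipeline:   # hypothetical resource key
      # Settings copied by hand from the existing pipeline
      configuration:
        source_path: /Volumes/main/raw/events  # hypothetical key-value parameter
      channel: CURRENT
      photon: true
      continuous: false
```

The pipeline ID itself does not need to be added here. Binding the bundle to the existing pipeline is what associates the two.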

Tips for success

Always use version control. If you aren’t using Databricks Git folders, store your project subdirectories and files in a Git or other version-controlled repository or file system.

Test your pipeline in a non-production environment (such as a “development” or “test” environment) before deploying it to a production environment. It’s easy to introduce a misconfiguration by accident.

Additional resources

For more information about using bundles to define and manage data processing, see:

Develop Delta Live Tables pipelines with Databricks Asset Bundles. This topic covers creating a bundle for a new pipeline rather than an existing one, with a source-controlled notebook for processing that you provide.