Data lakehouse architecture: Databricks well-architected framework

This set of data lakehouse architecture articles provides principles and best practices for the implementation and operation of a lakehouse using Databricks.

Databricks well-architected framework for the lakehouse

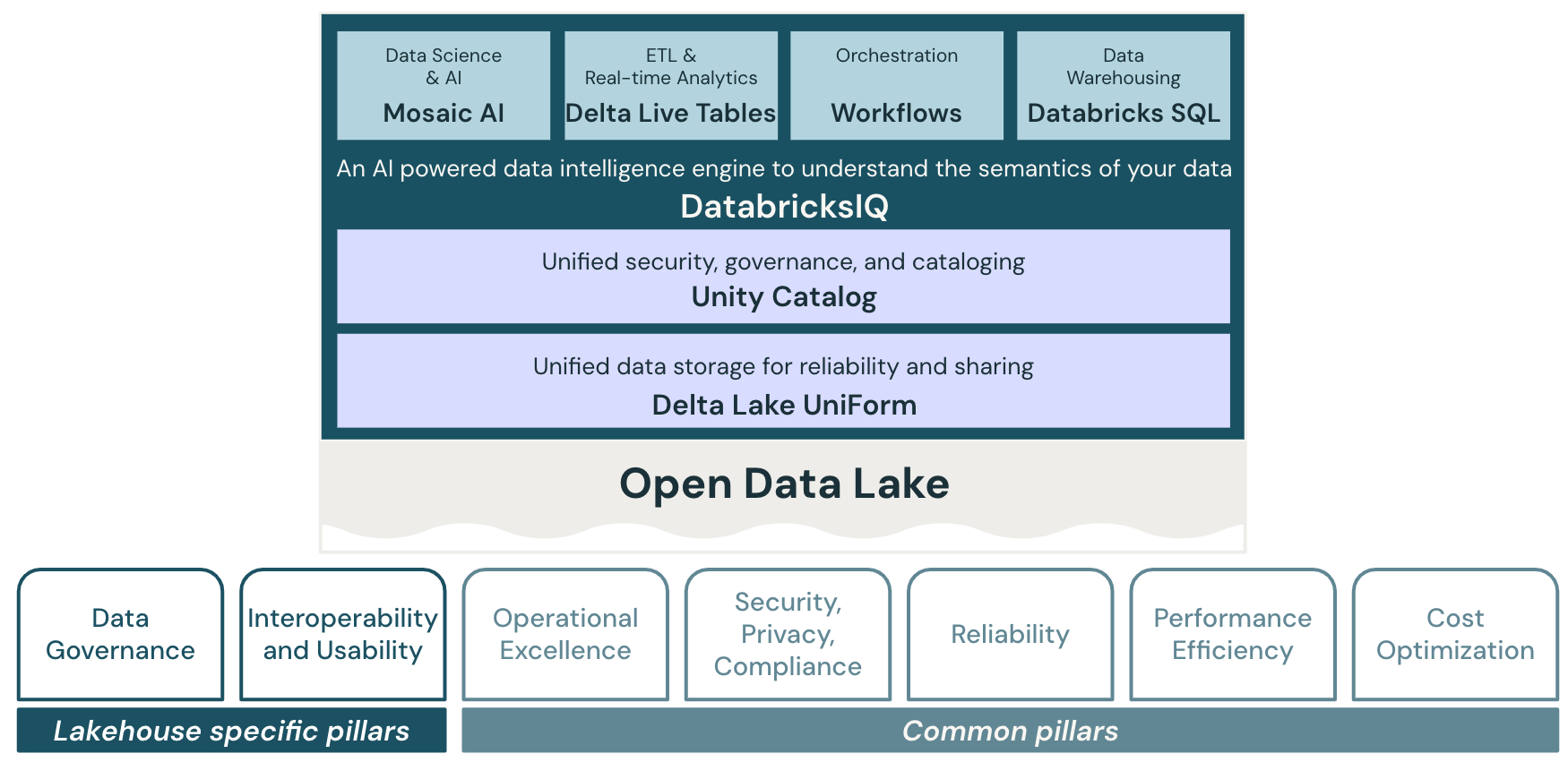

The well-architected lakehouse consists of seven pillars that describe different areas of concern for the implementation of a data lakehouse in the cloud:

Data and AI governance

The oversight to ensure that data and AI bring value and support your business strategy.

Interoperability and usability

The ability of the lakehouse to interact with users and other systems.

Operational excellence

All operational processes that keep the lakehouse running in production.

Security, privacy, and compliance

Protecting the Databricks application, customer workloads, and customer data from threats.

Reliability

The ability of a system to recover from failures and continue to function.

Performance efficiency

The ability of a system to adapt to changes in load.

Cost optimization

Managing costs to maximize the value delivered.

The well-architected lakehouse extends the Google Cloud Architecture Framework to the Databricks Data Intelligence Platform and shares five of its pillars: “Operational excellence,” “Security, privacy, and compliance,” “Reliability,” “Performance optimization” (here called “Performance efficiency”), and “Cost optimization.”

For these five pillars, the principles and best practices of the cloud framework still apply to the lakehouse. The well-architected lakehouse extends them with principles and best practices that are specific to the lakehouse and important for building an effective and efficient one.

The lakehouse-specific pillars

The pillars “Data and AI governance” and “Interoperability and usability” cover concerns specific to the lakehouse.

Data and AI governance encompasses the policies and practices implemented to securely manage the data and AI assets within an organization. One of the fundamental aspects of a lakehouse is centralized data and AI governance: the lakehouse unifies data warehousing and AI use cases on a single platform. This simplifies the modern data stack by eliminating the data silos that traditionally separate and complicate data engineering, analytics, BI, data science, and machine learning. To simplify governance tasks, the lakehouse offers a unified governance solution for data, analytics, and AI. By minimizing copies of your data and moving to a single data processing layer where all your data and AI governance controls run together, you improve your chances of staying compliant and of detecting a data breach.

Another important tenet of the lakehouse is to provide a great user experience for all the personas who work with it, and to interact with a wide ecosystem of external systems. Google Cloud already offers a variety of data tools that perform most tasks a data-driven enterprise might need. However, these tools must be properly assembled to provide the full functionality, and each service offers a different user experience. This approach can lead to high implementation costs and typically does not provide the same user experience as a native lakehouse platform: users are hindered by inconsistencies between tools and a lack of collaboration capabilities, and often have to go through complex processes to gain access to the system and thus to the data.

An integrated lakehouse, on the other hand, provides a consistent user experience across all workloads and therefore increases usability. This reduces training and onboarding costs and improves collaboration between functions. In addition, new features that further improve the user experience are added automatically over time, without the need to invest internal resources and budgets.

A multi-cloud approach can be a deliberate company strategy, or the result of mergers and acquisitions or of independent business units selecting different cloud providers. In this case, a multi-cloud lakehouse provides a unified user experience across all clouds. This reduces the proliferation of systems across the enterprise, which in turn reduces the skill and training requirements for employees involved in data-driven tasks.

Finally, in a networked world with cross-company business processes, systems must work together as seamlessly as possible. The degree of interoperability is a crucial criterion here: the most recent data, as a core asset of any business, must flow securely between internal systems and the systems of external partners.