Enable Private Service Connect for your workspace

Note

You must contact your Databricks account team to request access to enable Private Service Connect on your workspace. Databricks support for private connectivity using Private Service Connect is generally available.

This feature requires the Premium plan.

Secure a workspace with private connectivity and mitigate data exfiltration risks by enabling Google Private Service Connect (PSC) on the workspace. This article includes some configuration steps that you can perform using either the Databricks account console or the API.

For API reference information for the PSC API endpoints, see the API Reference docs, especially sections for VPC endpoints, private access settings, network configurations, and workspaces.

Two Private Service Connect options

There are two ways that you can use private connectivity so that you don’t expose the traffic to the public network. This article discusses how to configure either one or both Private Service Connect connection types:

Front-end Private Service Connect (user to workspace): Allows users to connect to the Databricks web application, REST API, and Databricks Connect API over a Virtual Private Cloud (VPC) endpoint.

Back-end Private Service Connect (classic compute plane to control plane): Connects Databricks classic compute resources in a customer-managed Virtual Private Cloud (VPC) (the classic compute plane) to the Databricks workspace core services (the control plane). Clusters connect to the control plane for two destinations: Databricks REST APIs and the secure cluster connectivity relay. Because of the different destination services, this Private Service Connect connection type involves two different VPC interface endpoints. For information about the data and control planes, see Databricks architecture overview.

Note

Previously, Databricks referred to the compute plane as the data plane.

You can implement both front-end and back-end Private Service Connect or just one of them. If you implement Private Service Connect for both the front-end and back-end connections, you can optionally mandate private connectivity for the workspace, which means Databricks rejects any connections over the public network. If you do not implement both connection types, you cannot enforce this requirement.

To enable Private Service Connect, you must create Databricks configuration objects and add new fields to existing configuration objects.

Important

In this release, you can create a new workspace with Private Service Connect connectivity using a customer-managed VPC that you set up. You cannot add Private Service Connect connectivity to an existing workspace. You cannot enable Private Service Connect on a workspace that uses a Databricks-managed VPC.

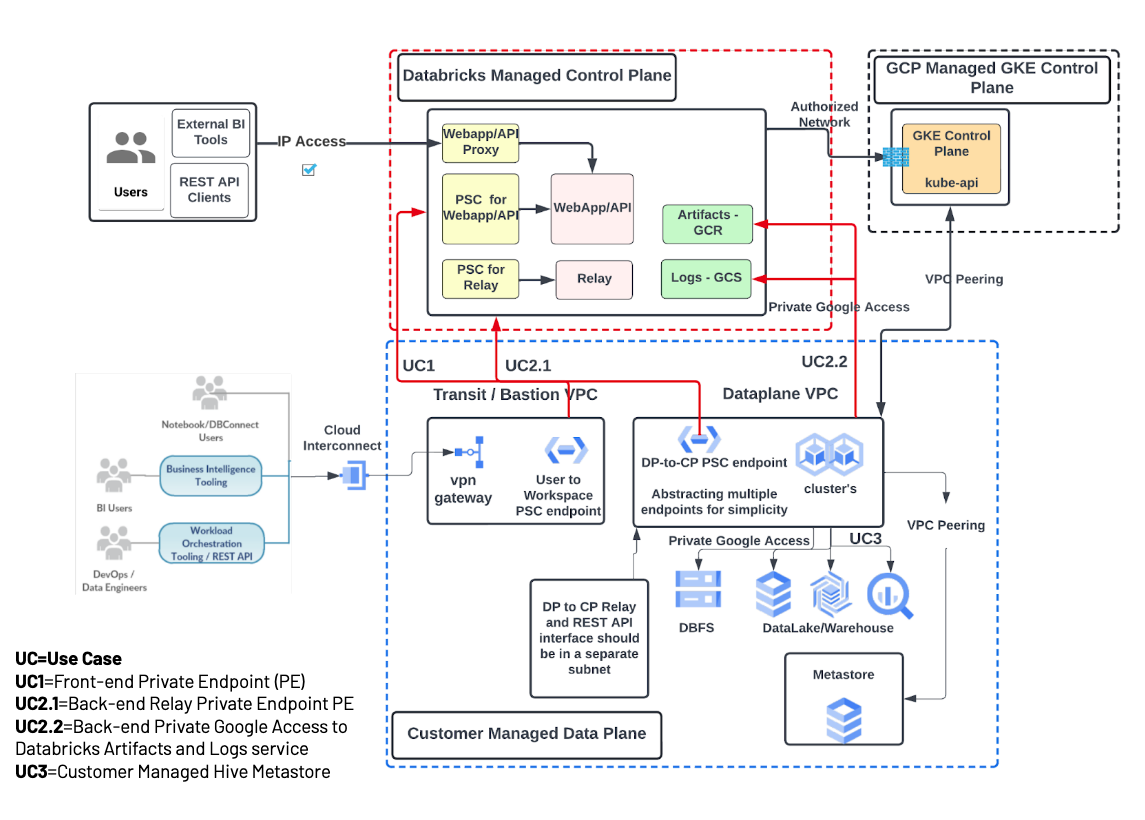

The following diagram is an overview of the Private Service Connect network flow and architecture with Databricks.

For more detailed diagrams and more information about using a firewall, see Reference architecture.

Security benefits

Using Private Service Connect helps mitigate the following data exfiltration risks:

Data access from a browser on the internet or an unauthorized network using the Databricks web application.

Data access from a client on the internet or an unauthorized network using the Databricks API.

Terminology

The following Google Cloud terms are used in this guide to describe Databricks configuration:

| Google terminology | Description |
|---|---|
| Private Service Connect (PSC) | A Google Cloud feature that provides private connectivity between VPC networks and Google Cloud services. |
| Host project | If you use what Google calls Shared VPCs, which allow you to use a different Google Cloud project for the VPC separate from the workspace’s main project ID for compute resources, this is the project in which the VPCs are created. This applies both to the classic compute plane VPC (for back-end Private Service Connect) and the transit VPC (for front-end Private Service Connect). |
| Service project | If you use what Google calls Shared VPCs, which allow you to use a different Google Cloud project for the VPC separate from the workspace’s main project ID for compute resources, this is the project for the workspace compute resources. |
| Private Service Connect endpoint or VPC endpoint | A private connection from a VPC network to services, for example services published by Databricks. |

The following table describes important terminology.

| Databricks terminology | Description |
|---|---|
| Databricks client | Either a user on a browser accessing the Databricks UI or an application client accessing the Databricks APIs. |
| Transit VPC | The VPC network hosting clients that access the Databricks workspace WebApp or APIs. |
| Front-end (user to workspace) Private Service Connect endpoint | The Private Service Connect endpoint configured on the transit VPC network that allows clients to privately connect to the Databricks web application and APIs. |
| Back-end (classic compute plane to control plane) Private Service Connect endpoints | The Private Service Connect endpoints configured on your customer-managed VPC network to allow private communication between the classic compute plane and the Databricks control plane. |
| Classic compute plane VPC | The VPC network that hosts the compute resources of your Databricks workspace. You configure your customer-managed compute plane VPC in your Google Cloud organization. |
| Private workspace | Refers to a workspace where the virtual machines of the classic compute plane do not have any public IP address. The workspace endpoints on the Databricks control plane can only be accessed privately from authorized VPC networks or authorized IP addresses, such as the VPC for your classic compute plane or your PSC transit VPCs. |

Requirements and limitations

The following requirements and limitations apply:

New workspaces only: You can create a new workspace with Private Service Connect connectivity. You cannot add Private Service Connect connectivity to an existing workspace.

Customer-managed VPC is required: You must use a customer-managed VPC. You need to create your VPC in the Google Cloud console or with another tool. Next, in the Databricks account console or the API, you create a network configuration that references your VPC and sets additional fields that are specific to Private Service Connect.

Enable your account: Databricks must enable your account for the feature. To enable Private Service Connect on one or more workspaces, contact your Databricks account team and request to enable it on your account. Provide the Google Cloud region and your host project ID to reserve quota for Private Service Connect connections. After your account is enabled for Private Service Connect, use the Databricks account console or the API to configure your Private Service Connect objects and create new workspaces.

Quotas: You can configure up to two Private Service Connect endpoints to the Databricks service for each VPC host project. You can deploy classic compute planes for multiple Databricks workspaces on the same VPC network. In such a scenario, all those workspaces will share the same Private Service Connect endpoints. Please contact your account team if this limitation does not work for you.

No cross-region connectivity: Private Service Connect workspace components must be in the same region including:

Transit VPC network and subnets

Compute plane VPC network and subnets

Databricks workspace

Private Service Connect endpoints

Private Service Connect endpoint subnets

Sample datasets are not available. Sample Unity Catalog datasets and Databricks datasets are not available when back-end Private Service Connect is configured. See Sample datasets.
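The same-region requirement above can be checked before you create any Databricks objects. The following Python sketch is illustrative only: the component names and regions are hypothetical placeholders, and the helper `find_region_mismatches` is not part of any Databricks or Google Cloud API.

```python
def find_region_mismatches(component_regions: dict, workspace_region: str) -> dict:
    """Return the components whose region differs from the workspace region."""
    return {
        name: region
        for name, region in component_regions.items()
        if region != workspace_region
    }


# Hypothetical inventory of the components listed above.
components = {
    "transit_vpc_subnet": "us-east4",
    "compute_plane_vpc_subnet": "us-east4",
    "psc_endpoint_workspace": "us-east4",
    "psc_endpoint_scc_relay": "us-central1",  # deliberately wrong region
    "psc_endpoint_subnet": "us-east4",
}

mismatches = find_region_mismatches(components, workspace_region="us-east4")
for name, region in mismatches.items():
    print(f"{name} is in {region}, expected us-east4")
```

An empty result means every listed component is in the workspace region.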

Multiple options for network topology

You can deploy a private Databricks workspace with the following network configuration options:

Host Databricks users (clients) and the Databricks classic compute plane on the same network: In this option, the transit VPC and compute plane VPC refer to the same underlying VPC network. If you choose this topology, all access to any Databricks workspace from that VPC must go over the front-end Private Service Connect connection for that VPC. See Requirements and limitations.

Host Databricks users (clients) and the Databricks classic compute plane on separate networks: In this option, the user or application client can access different Databricks workspaces using different network paths. You can optionally allow a user on the transit VPC to access a private workspace over a Private Service Connect connection and also allow users on the public internet to access the workspace.

Host compute plane for multiple Databricks workspaces on the same network: In this option, the compute plane VPCs for multiple Databricks workspaces refer to the same underlying VPC network. All such workspaces must share the same back-end Private Service Connect endpoints. This deployment pattern lets you configure fewer Private Service Connect endpoints while supporting a large number of workspaces.

You can share one transit VPC for multiple workspaces. However, each transit VPC must contain only workspaces that use front-end PSC, or only workspaces that do not use front-end PSC. Due to the way DNS resolution works on Google Cloud, you cannot use both types of workspaces with a single transit VPC.
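The transit VPC rule above (all workspaces on one transit VPC use front-end PSC, or none do) can be expressed as a one-line consistency check. This is a minimal sketch; the workspace names and the helper `transit_vpc_is_consistent` are hypothetical.

```python
def transit_vpc_is_consistent(front_end_psc_by_workspace: dict) -> bool:
    """True if every workspace reached through one transit VPC agrees on front-end PSC usage."""
    # A set of booleans has at most one element when all workspaces agree.
    return len(set(front_end_psc_by_workspace.values())) <= 1


# Hypothetical workspaces reached from a single transit VPC.
print(transit_vpc_is_consistent({"ws-analytics": True, "ws-ml": True}))
print(transit_vpc_is_consistent({"ws-analytics": True, "ws-legacy": False}))
```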

Reference architecture

A Databricks workspace deployment includes the following network paths that you can secure:

Databricks client on your transit VPC to the Databricks control plane. This includes both the web application and REST API access.

Databricks compute plane VPC network to the Databricks control plane service. This includes the secure cluster connectivity relay and the workspace connection for the REST API endpoints.

Databricks compute plane to storage in a Databricks-managed project.

Databricks compute plane VPC network to the GKE API server.

Databricks control plane to storage in your projects including the DBFS bucket.

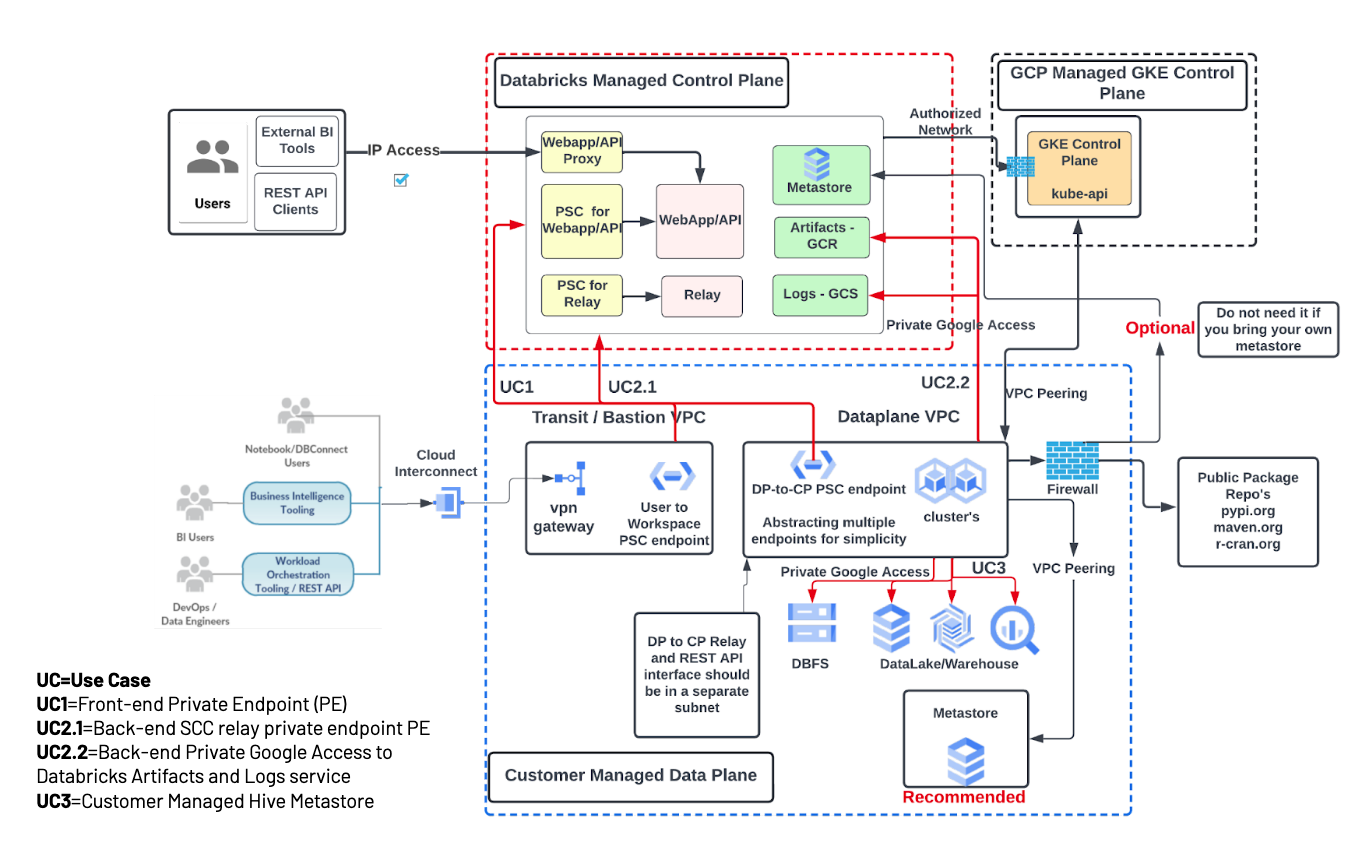

It’s possible to have a no-firewall architecture to restrict outbound traffic, ideally using an external metastore. Outbound traffic to a public library repository is not possible by default, but you can bring your own locally mirrored package repo. The following diagram shows a network architecture for a full (front-end and back-end) Private Service Connect deployment with no firewalls:

You can also use a firewall architecture and allow egress to public package repos and the (optional) Databricks-managed metastore. The following diagram shows a network architecture for a full (front-end and back-end) Private Service Connect deployment with a firewall for egress control:

Regional service attachments reference

To enable Private Service Connect, you need the service attachment URIs for the following endpoints for your region:

The workspace endpoint. This ends with the suffix `plproxy-psc-endpoint-all-ports`. This has a dual role: back-end Private Service Connect uses it to connect to the control plane for REST APIs, and front-end Private Service Connect uses it to connect your transit VPC to the workspace web application and REST APIs.

The secure cluster connectivity (SCC) relay endpoint. This ends with the suffix `ngrok-psc-endpoint`. This is used only for back-end Private Service Connect, to connect to the control plane for the SCC relay.

To get the workspace endpoint and SCC relay endpoint service attachment URIs for your region, see Private Service Connect (PSC) attachment URIs and project numbers.
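Because the two service attachments are distinguished by their suffixes, a small helper can tell you which role a given URI plays. The sketch below is an illustration, not a Databricks utility: the example URI is a made-up placeholder, and real URIs must come from the regional reference page.

```python
WORKSPACE_SUFFIX = "plproxy-psc-endpoint-all-ports"
SCC_RELAY_SUFFIX = "ngrok-psc-endpoint"


def classify_service_attachment(uri: str) -> dict:
    """Map a service attachment URI to its role, based only on its suffix."""
    if uri.endswith(WORKSPACE_SUFFIX):
        # The workspace attachment serves both connection types.
        return {"endpoint": "workspace", "used_by": ["front-end", "back-end"]}
    if uri.endswith(SCC_RELAY_SUFFIX):
        # The SCC relay attachment is used only by the classic compute plane.
        return {"endpoint": "scc-relay", "used_by": ["back-end"]}
    raise ValueError(f"Unrecognized service attachment URI: {uri}")


# Hypothetical URI; the project and path segments are placeholders.
example_uri = "projects/example-project/regions/us-east4/serviceAttachments/ngrok-psc-endpoint"
print(classify_service_attachment(example_uri))
```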

Step 1: Enable your account for Private Service Connect

Before Databricks can accept Private Service Connect connections from your Google Cloud projects, you must contact your Databricks account team and provide the following information for each workspace where you want to enable Private Service Connect:

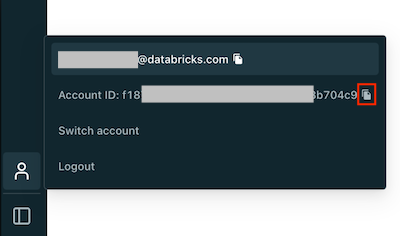

Databricks account ID

As an account admin, go to the Databricks account console.

At the bottom of the left menu (you might need to scroll), click on the User button (the person icon).

In the popup that appears, copy the account ID by clicking the icon to the right of the ID.

VPC Host Project ID of the compute plane VPC, if you are enabling back-end Private Service Connect

VPC Host Project ID of the transit VPC, if you are enabling front-end Private Service Connect

Region of the workspace

Important

A Databricks representative responds with a confirmation once Databricks is configured to accept Private Service Connect connections from your Google Cloud projects. This can take up to three business days.

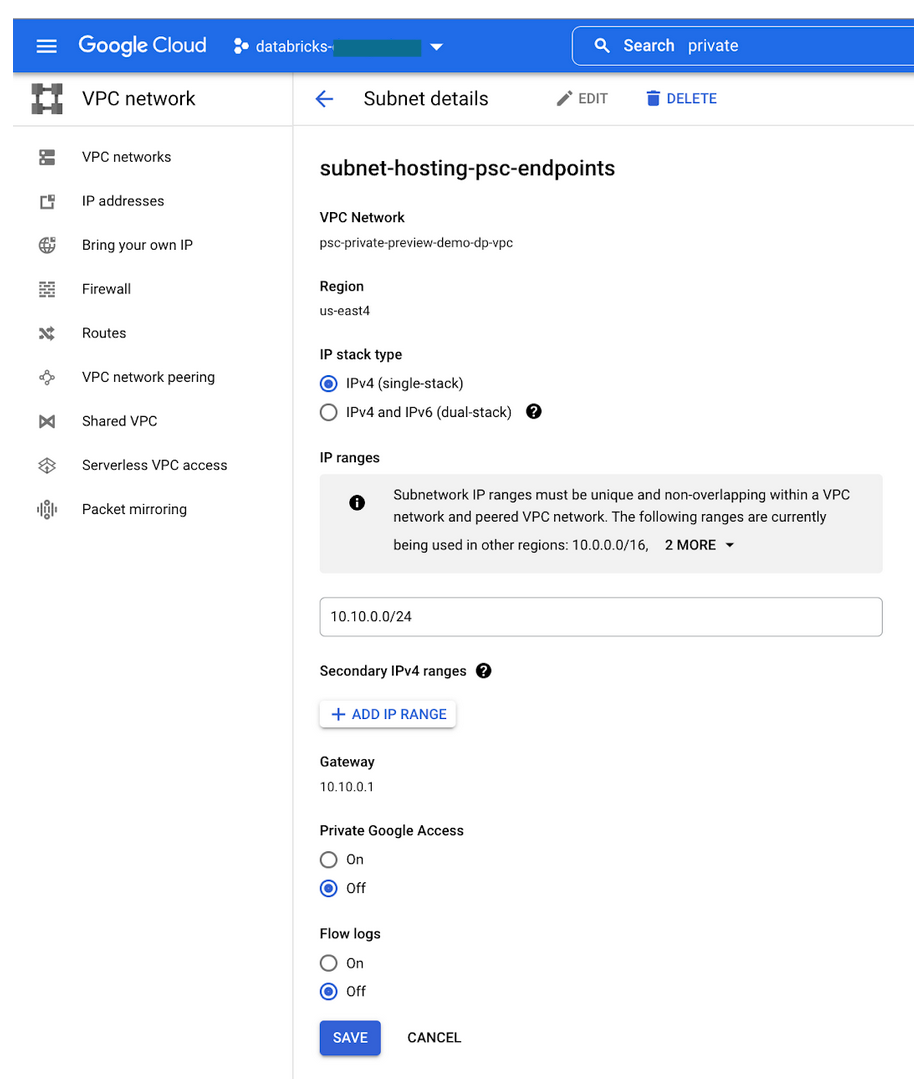

Step 2: Create a subnet

In the compute plane VPC network, create a subnet specifically for Private Service Connect endpoints. The following instructions assume use of the Google Cloud console, but you can also use the gcloud CLI to perform similar tasks.

To create a subnet:

In the Google Cloud console, go to the VPC list page.

Click Add subnet.

Set the name, description, and region.

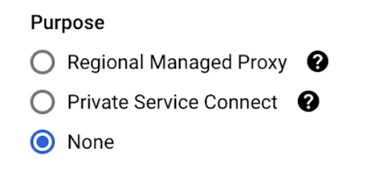

If the Purpose field is visible (it might not be visible), choose None:

Set a private IP range for the subnet, such as `10.0.0.0/24`.

Important

Your IP ranges cannot overlap for any of the following:

Subnet of BYO VPC, secondary IPv4 ranges.

Subnet that holds the Private Service Connect endpoints.

GKE cluster IP range, which is a field when you create the Databricks workspace.

The page looks generally like the following:

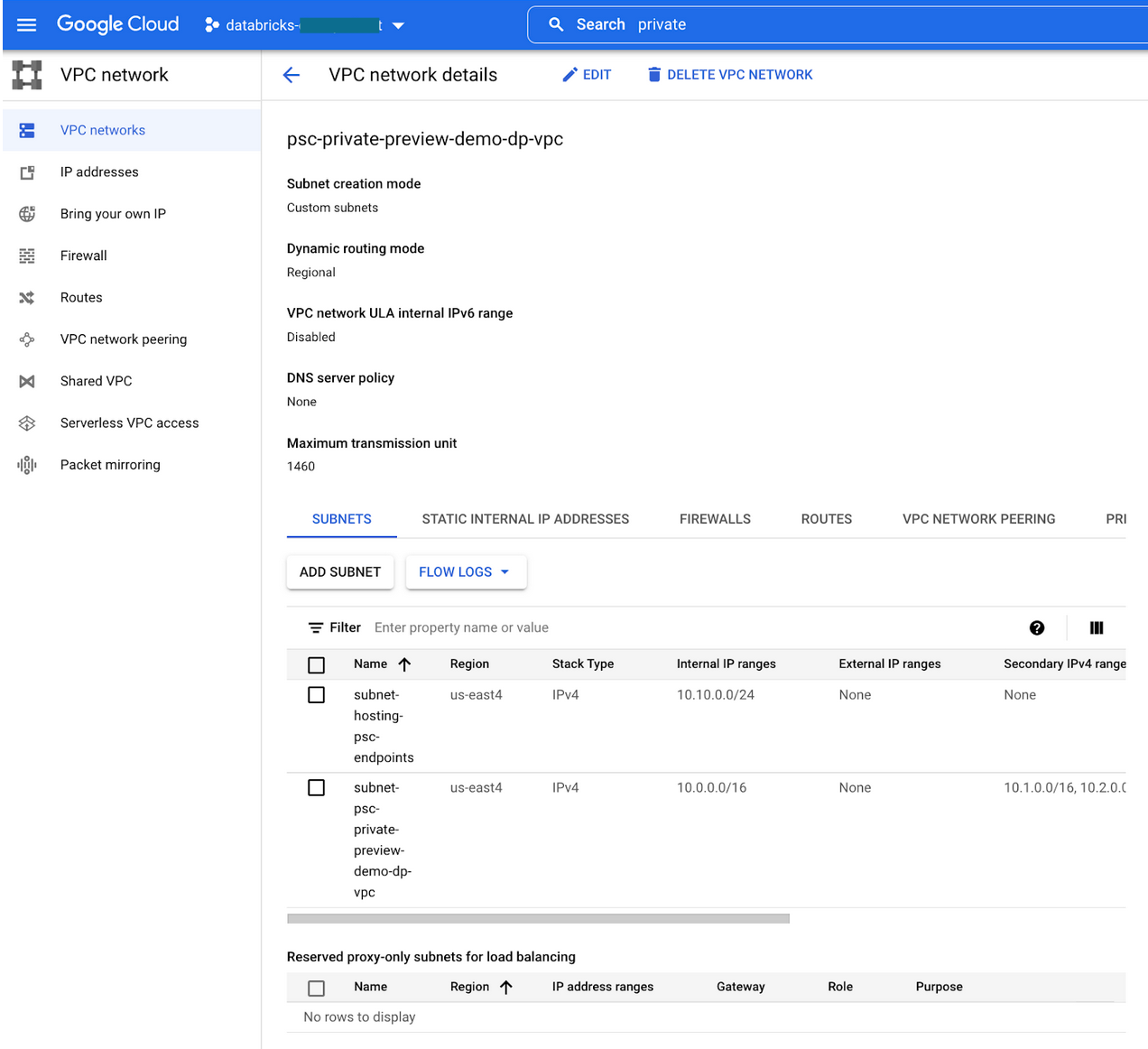

Confirm that your subnet was added to the VPC view in the Google Cloud console for your VPC:
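The non-overlap rule for IP ranges in the steps above can be verified with Python's standard `ipaddress` module before you create the subnet. This is a sketch under assumed values: the range names and CIDR blocks are hypothetical examples, not recommended settings.

```python
import ipaddress
from itertools import combinations


def find_overlaps(ranges: dict) -> list:
    """Return pairs of range names whose CIDR blocks overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]


# Hypothetical ranges for one workspace.
ranges = {
    "workspace_subnet": "10.0.0.0/24",
    "gke_pods_secondary": "10.1.0.0/16",
    "psc_endpoint_subnet": "10.0.0.128/25",  # deliberately overlaps workspace_subnet
    "gke_master_range": "10.5.0.0/28",
}

overlaps = find_overlaps(ranges)
print(overlaps)
```

An empty list means the ranges are safe to use together under this rule.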

Step 3: Create VPC endpoints

You need to create VPC endpoints that connect to Databricks service attachments. The service attachment URIs vary by workspace region. The following instructions assume use of the Google Cloud console, but you can also use the gcloud CLI to perform similar tasks. For instructions on creating VPC endpoints to the service attachments by using the gcloud CLI or API, see the Google article “Create a Private Service Connect Endpoint”.

On the subnet that you created, create VPC endpoints to the following service attachments from your compute plane VPC:

The workspace endpoint. This ends with the suffix `plproxy-psc-endpoint-all-ports`.

The secure cluster connectivity relay endpoint. This ends with the suffix `ngrok-psc-endpoint`.

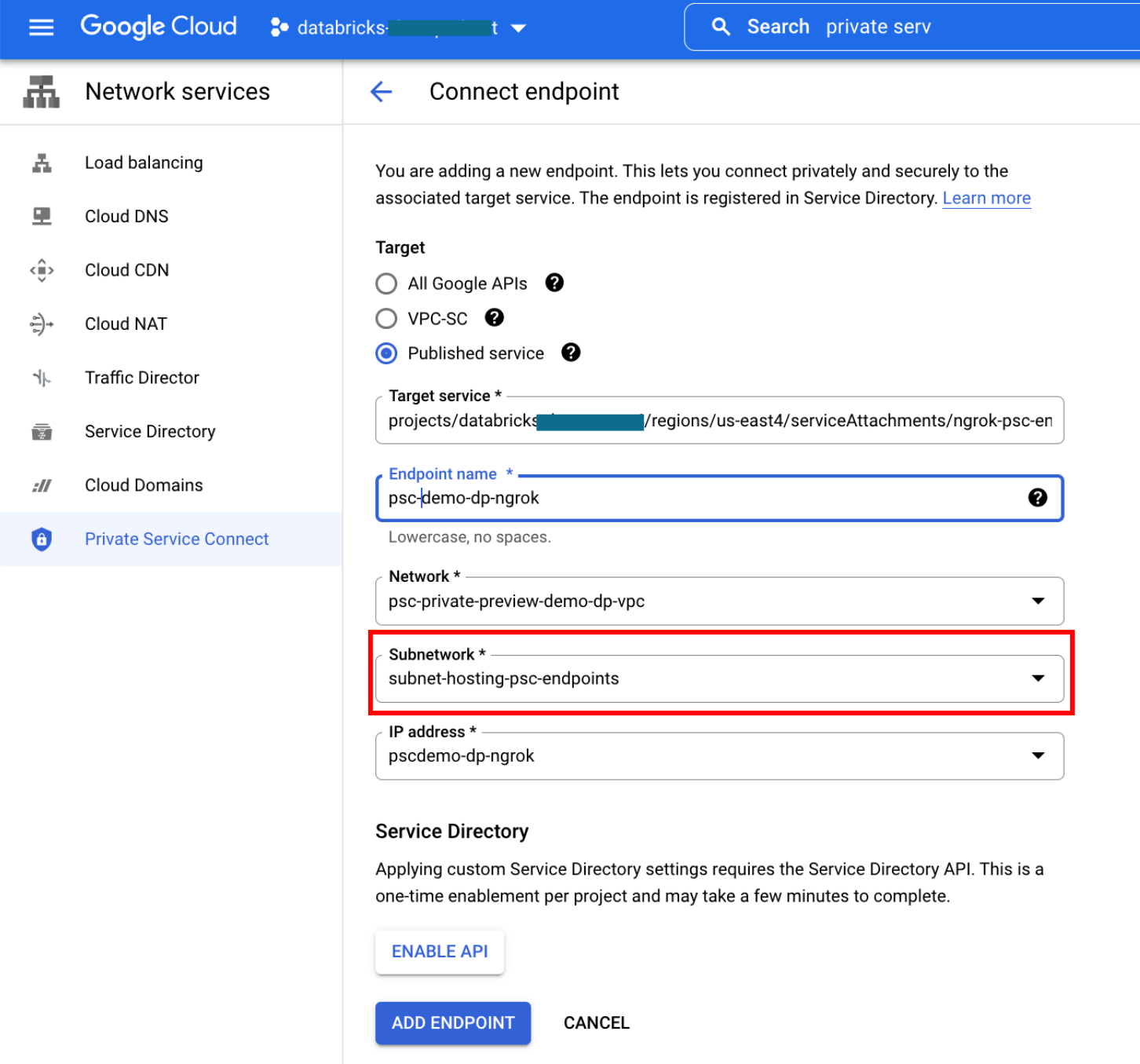

To create a VPC endpoint in Google Cloud console:

In the Google Cloud console, go to Private Service Connect.

Click the CONNECTED ENDPOINTS tab.

Click + Connect endpoint.

For Target, select Published service.

For Target service, enter the service attachment URI.

Important

See the table in Regional service attachments reference to get the two Databricks service attachment URIs for your workspace region.

For the endpoint name, enter a name to use for the endpoint.

Select a VPC network for the endpoint.

Select a subnet for the endpoint. Specify the subnet that you created for Private Service Connect endpoints.

Select an IP address for the endpoint. If you need a new IP address:

Click the IP address drop-down menu and select Create IP address.

Enter a name and optional description.

For a static IP address, select Assign automatically or Let me choose.

If you selected Let me choose, enter the custom IP address.

Click Reserve.

Select a namespace from the drop-down list or create a new namespace. The region is populated based on the selected subnetwork.

Click Add endpoint.

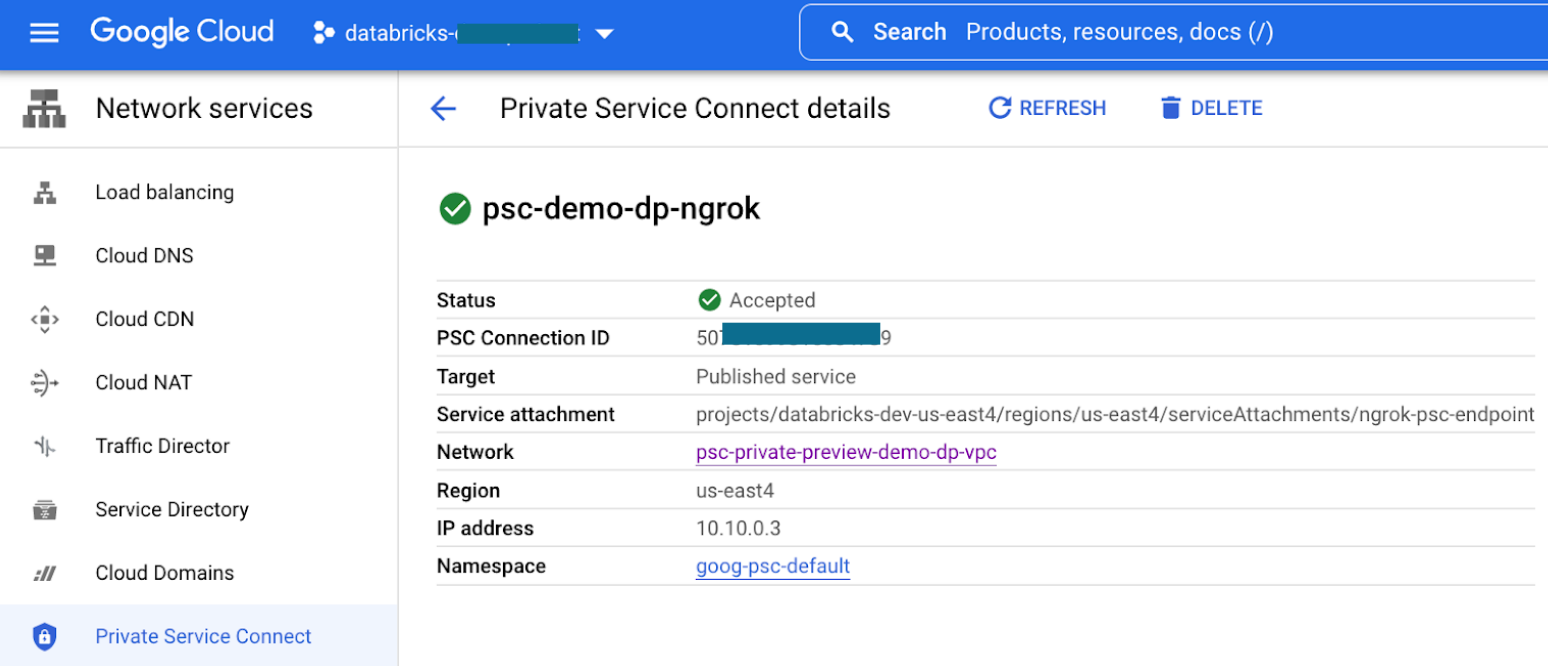

The endpoint from the compute plane VPC to the workspace service attachment URI looks like this:


Step 4: Configure front-end private access

To configure private access from Databricks clients for front-end Private Service Connect:

Create a transit VPC network or reuse an existing one.

Create or reuse a subnet with a private IP range that has access to the front-end Private Service Connect endpoint.

Important

Ensure that your users have access to VMs or devices on that subnet.

Create a VPC endpoint from the transit VPC to the workspace (`plproxy-psc-endpoint-all-ports`) service attachment.

To get the full name to use for your region, see Private Service Connect (PSC) attachment URIs and project numbers.

The form in Google Cloud console for this endpoint looks generally like the following:

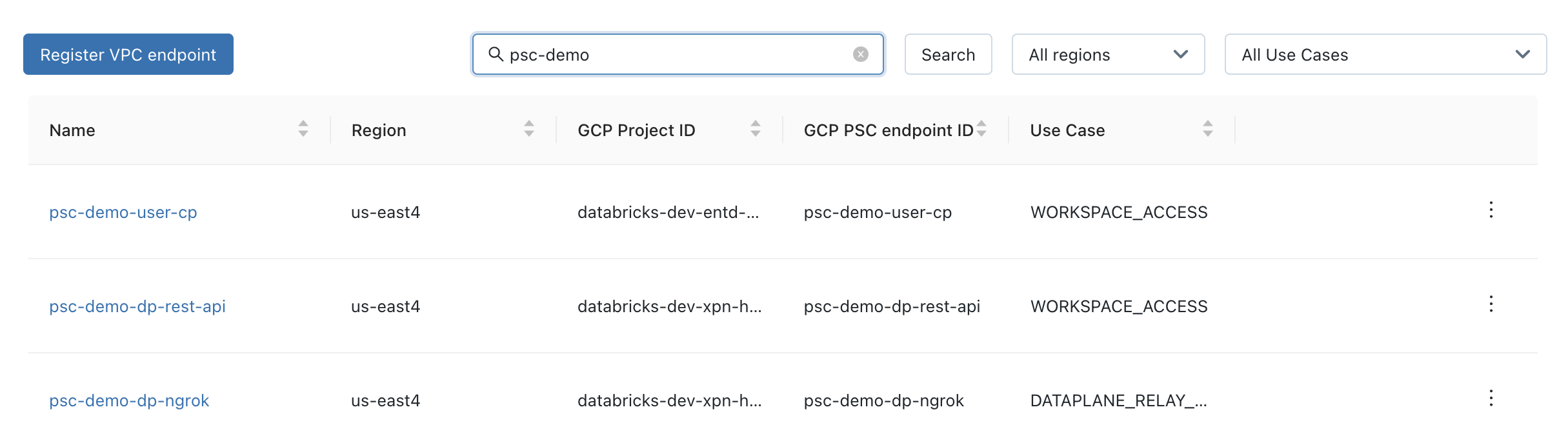

Step 5: Register your VPC endpoints

Use the account console

Register your Google Cloud endpoints using the Databricks account console.

Go to the Databricks account console.

Click the Cloud resources tab, then VPC endpoints.

Click Register VPC endpoint.

For each of your Private Service Connect endpoints, fill in the required fields to register a new VPC endpoint:

VPC endpoint name: A human readable name to identify the VPC endpoint. Databricks recommends using the same as your Private Service Connect endpoint ID, but it is not required that these match.

Region: The Google Cloud region where this Private Service Connect endpoint is defined.

Google Cloud VPC network project ID: The Google Cloud project ID where this endpoint is defined. For back-end connectivity, this is the project ID for your workspace’s VPC network. For front-end connectivity, this is the project ID of the VPC where user connections originate, which is sometimes referred to as a transit VPC.

The following table shows what information you need to use for each endpoint if you are using both back-end and front-end Private Service Connect.

| Endpoint type | Field | Example |
|---|---|---|
| Front-end transit VPC endpoint | VPC endpoint name (Databricks recommends matching the Google Cloud endpoint ID) | |
| | Google Cloud VPC network project ID | |
| | Google Cloud Region | |
| Back-end compute plane VPC REST/workspace endpoint | VPC endpoint name (Databricks recommends matching the Google Cloud endpoint ID) | |
| | Google Cloud VPC network project ID | |
| | Google Cloud Region | |
| Back-end compute plane VPC SCC relay endpoint | VPC endpoint name (Databricks recommends matching the Google Cloud endpoint ID) | |
| | Google Cloud VPC network project ID | |
| | Google Cloud Region | |

When you are done, you can use the VPC endpoints list in the account console to review the list of endpoints and confirm the information. It would look generally like this:

Use the API

For API reference information, see the API Reference docs, particularly for VPC endpoints.

To register a VPC endpoint using a REST API:

Create a Google ID token. Follow the instructions on Authentication with Google ID tokens for account-level APIs. The API to register a VPC endpoint also requires a Google access token, in addition to the Google ID token.

Using `curl` or another REST API client, make a `POST` request to the `accounts.gcp.databricks.com` server and call the `/accounts/<account-id>/vpc-endpoints` endpoint. The request arguments are as follows:

| Parameter | Description |
|---|---|
| `vpc_endpoint_name` | Human-readable name for the registered endpoint |
| `gcp_vpc_endpoint_info` | Details of the endpoint, as a JSON object with the following fields: `project_id`: Project ID; `psc_endpoint_name`: PSC endpoint name; `endpoint_region`: Google Cloud region for the endpoint |

Review the response JSON. This returns an object that is similar to the request payload but the response has a few additional fields. The response fields are:

| Parameter | Description |
|---|---|
| `vpc_endpoint_name` | Human-readable name for the registered endpoint |
| `account_id` | Databricks account ID |
| `use_case` | `WORKSPACE_ACCESS` |
| `gcp_vpc_endpoint_info` | Details of the endpoint, as a JSON object with the following fields: `project_id`: Project ID; `psc_endpoint_name`: PSC endpoint name; `endpoint_region`: Google Cloud region for the endpoint; `psc_connection_id`: PSC connection ID; `service_attachment_id`: PSC service attachment ID |

The following curl example adds the additional required Google access token HTTP header and registers a VPC endpoint:

    curl \
      -X POST \
      --header 'Authorization: Bearer <google-id-token>' \
      --header 'X-Databricks-GCP-SA-Access-Token: <access-token-sa-2>' \
      --header 'Content-Type: application/json' \
      'https://accounts.gcp.databricks.com/api/2.0/accounts/<account-id>/vpc-endpoints' \
      -d '{
        "vpc_endpoint_name": "psc-demo-dp-rest-api",
        "gcp_vpc_endpoint_info": {
          "project_id": "databricks-dev-xpn-host",
          "psc_endpoint_name": "psc-demo-dp-rest-api",
          "endpoint_region": "us-east4"
        }
      }'
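If you prefer to script the registration, the same request can be assembled with Python's standard library. The sketch below only builds the request object and does not send it. The function name and argument values are hypothetical; the URL, headers, and payload fields mirror the curl example above.

```python
import json
import urllib.request

ACCOUNTS_HOST = "https://accounts.gcp.databricks.com"


def build_register_vpc_endpoint_request(
    account_id: str,
    google_id_token: str,
    google_access_token: str,
    vpc_endpoint_name: str,
    project_id: str,
    psc_endpoint_name: str,
    endpoint_region: str,
) -> urllib.request.Request:
    """Build (but do not send) the POST request that registers a VPC endpoint."""
    payload = {
        "vpc_endpoint_name": vpc_endpoint_name,
        "gcp_vpc_endpoint_info": {
            "project_id": project_id,
            "psc_endpoint_name": psc_endpoint_name,
            "endpoint_region": endpoint_region,
        },
    }
    return urllib.request.Request(
        url=f"{ACCOUNTS_HOST}/api/2.0/accounts/{account_id}/vpc-endpoints",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {google_id_token}",
            "X-Databricks-GCP-SA-Access-Token": google_access_token,
            "Content-Type": "application/json",
        },
    )


# Placeholder values; substitute your own account ID and tokens.
request = build_register_vpc_endpoint_request(
    account_id="my-account-id",
    google_id_token="<google-id-token>",
    google_access_token="<access-token-sa-2>",
    vpc_endpoint_name="psc-demo-dp-rest-api",
    project_id="databricks-dev-xpn-host",
    psc_endpoint_name="psc-demo-dp-rest-api",
    endpoint_region="us-east4",
)
print(request.get_method(), request.full_url)
```

To send the request, pass it to `urllib.request.urlopen(request)` and parse the JSON response body.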

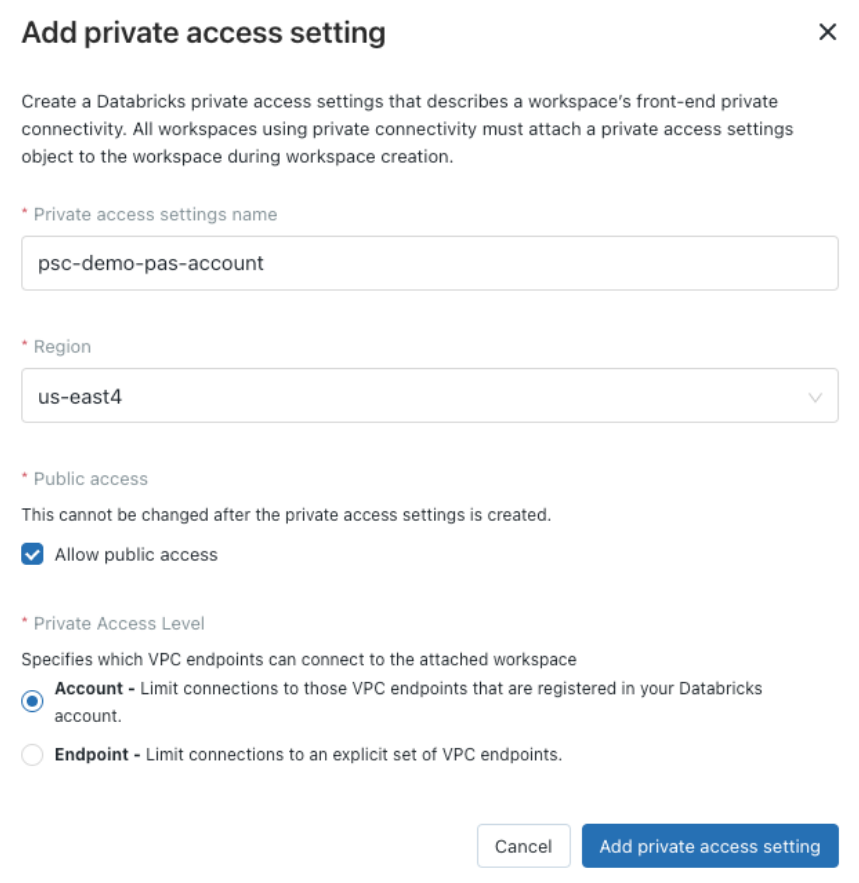

Step 6: Create a Databricks private access settings object

Create a private access settings object, which defines several Private Service Connect settings for your workspace. This object will be attached to your workspace. One private access settings object can be attached to multiple workspaces.

Use the account console

Create a Databricks private access settings object using the Databricks account console:

Go to the Databricks account console.

Click the Cloud resources tab, then Private Access Settings.

Click Add private access setting.

Set required fields:

Private access settings name: Human readable name to identify this private access settings object.

Region: The region of the connections between VPC endpoints and the workspaces that this private access settings object configures.

Public access enabled: Specify if public access is allowed. Choose this value carefully because it cannot be changed after the private access settings object is created. Note: In both cases, IP access lists cannot block private traffic from Private Service Connect because the access lists only control access from the public internet.

If public access is enabled, users can configure the IP access lists to allow/block public access (from the public internet) to the workspaces that use this private access settings object.

If public access is disabled, no public traffic can access the workspaces that use this private access settings object. The IP access lists do not affect public access.

Private access level: A specification to restrict access to only authorized Private Service Connect connections. It can be one of the below values:

Account: Any VPC endpoints registered with your Databricks account can access this workspace. This is the default value.

Endpoint: Only the VPC endpoints that you specify explicitly can access the workspace. If you choose this value, you can choose from among your registered VPC endpoints.
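The interaction between the public access setting and the private access level can be summarized as a small decision function. This is a simplified model of the rules described above, not Databricks code: the function, its parameters, and the endpoint IDs are all hypothetical.

```python
from typing import Optional


def connection_allowed(
    *,
    via_psc: bool,
    public_access_enabled: bool,
    private_access_level: str = "ACCOUNT",
    endpoint_id: Optional[str] = None,
    registered_endpoints: frozenset = frozenset(),
    allowed_endpoints: frozenset = frozenset(),
    ip_allowed_by_access_list: bool = True,
) -> bool:
    """Model whether a connection to the workspace would be accepted."""
    if not via_psc:
        # Public traffic needs public access enabled, then IP access lists apply.
        return public_access_enabled and ip_allowed_by_access_list
    # Private traffic is never filtered by IP access lists.
    if private_access_level == "ACCOUNT":
        return endpoint_id in registered_endpoints
    if private_access_level == "ENDPOINT":
        return endpoint_id in allowed_endpoints
    raise ValueError(f"Unknown private access level: {private_access_level}")


registered = frozenset({"ep-1", "ep-2"})
print(connection_allowed(via_psc=False, public_access_enabled=False))
print(connection_allowed(via_psc=True, public_access_enabled=False,
                         endpoint_id="ep-2", registered_endpoints=registered))
```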

Use the API

For API reference information, see the API Reference docs, particularly for private access settings.

To create Databricks private access settings object using a REST API:

If you have not done it already, or if your token has expired, create a Google ID token. Follow the instructions on Authentication with Google ID tokens for account-level APIs. The API to create a private access settings object does not require a Google access token. You need a Google access token for other steps, but not for this one.

Using `curl` or another REST API client, make a `POST` request to the `accounts.gcp.databricks.com` server and call the `/accounts/<account-id>/private-access-settings` endpoint. The request arguments are as follows:

| Parameter | Description |
|---|---|
| `private_access_settings_name` | Human-readable name for the private access settings object |
| `region` | Google Cloud region for the private access settings object |
| `private_access_level` | Defines which VPC endpoints the workspace accepts. `ACCOUNT`: The workspace accepts only VPC endpoints registered with the workspace’s Databricks account. This is the default value if omitted. `ENDPOINT`: The workspace accepts only VPC endpoints explicitly listed by ID in the separate `allowed_vpc_endpoints` field. |
| `allowed_vpc_endpoints` | Array of VPC endpoint IDs to allow list. Only used if `private_access_level` is `ENDPOINT`. |
| `public_access_enabled` | Specify if public access is allowed. Choose this value carefully because it cannot be changed after the private access settings object is created. If public access is enabled, users can configure the IP access lists to allow/block public access (from the public internet) to the workspaces that use this private access settings object. If public access is disabled, no public traffic can access the workspaces that use this private access settings object. The IP access lists do not affect public access. |

Note

In both cases, IP access lists cannot block private traffic from Private Service Connect because the access lists only control access from the public internet. Only VPC endpoints defined in `allowed_vpc_endpoints` can access your workspace.

Review the response. This returns an object that is similar to the request object but has additional fields:

`account_id`: The Databricks account ID.

`private_access_settings_id`: The private access settings object ID.

For example:

    curl \
      -X POST \
      --header 'Authorization: Bearer <google-id-token>' \
      --header 'Content-Type: application/json' \
      'https://accounts.gcp.databricks.com/api/2.0/accounts/<account-id>/private-access-settings' \
      -d '{
        "private_access_settings_name": "psc-demo-pas-account",
        "region": "us-east4",
        "private_access_level": "ACCOUNT",
        "public_access_enabled": true
      }'

This generates a response similar to:

{

"private_access_settings_id": "999999999-95af-4abc-ab7c-b590193a9c74",

"account_id": "<real account id>",

"private_access_settings_name": "psc-demo-pas-account",

"region": "us-east4",

"public_access_enabled": true,

"private_access_level": "ACCOUNT"

}

Tip

If you want to review the set of private access settings objects using the API, make a GET request to the https://accounts.gcp.databricks.com/api/2.0/accounts/<account-id>/private-access-settings endpoint.
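When you generate the private access settings payload programmatically, it helps to validate the combination of `private_access_level` and `allowed_vpc_endpoints` before sending it. The following is a sketch under assumptions: the helper name is hypothetical, and rejecting an empty endpoint list for `ENDPOINT` is a choice made in this example, not documented API behavior.

```python
from typing import Optional


def build_private_access_settings(
    name: str,
    region: str,
    public_access_enabled: bool,
    private_access_level: str = "ACCOUNT",
    allowed_vpc_endpoints: Optional[list] = None,
) -> dict:
    """Build the JSON payload for a private access settings object."""
    if private_access_level not in ("ACCOUNT", "ENDPOINT"):
        raise ValueError("private_access_level must be ACCOUNT or ENDPOINT")
    # Assumption of this sketch: ENDPOINT without any endpoint IDs is a mistake.
    if private_access_level == "ENDPOINT" and not allowed_vpc_endpoints:
        raise ValueError("ENDPOINT level needs at least one allowed VPC endpoint ID")

    payload = {
        "private_access_settings_name": name,
        "region": region,
        "private_access_level": private_access_level,
        "public_access_enabled": public_access_enabled,
    }
    # allowed_vpc_endpoints is only used when the level is ENDPOINT.
    if private_access_level == "ENDPOINT":
        payload["allowed_vpc_endpoints"] = list(allowed_vpc_endpoints)
    return payload


payload = build_private_access_settings("psc-demo-pas-account", "us-east4", True)
print(payload)
```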

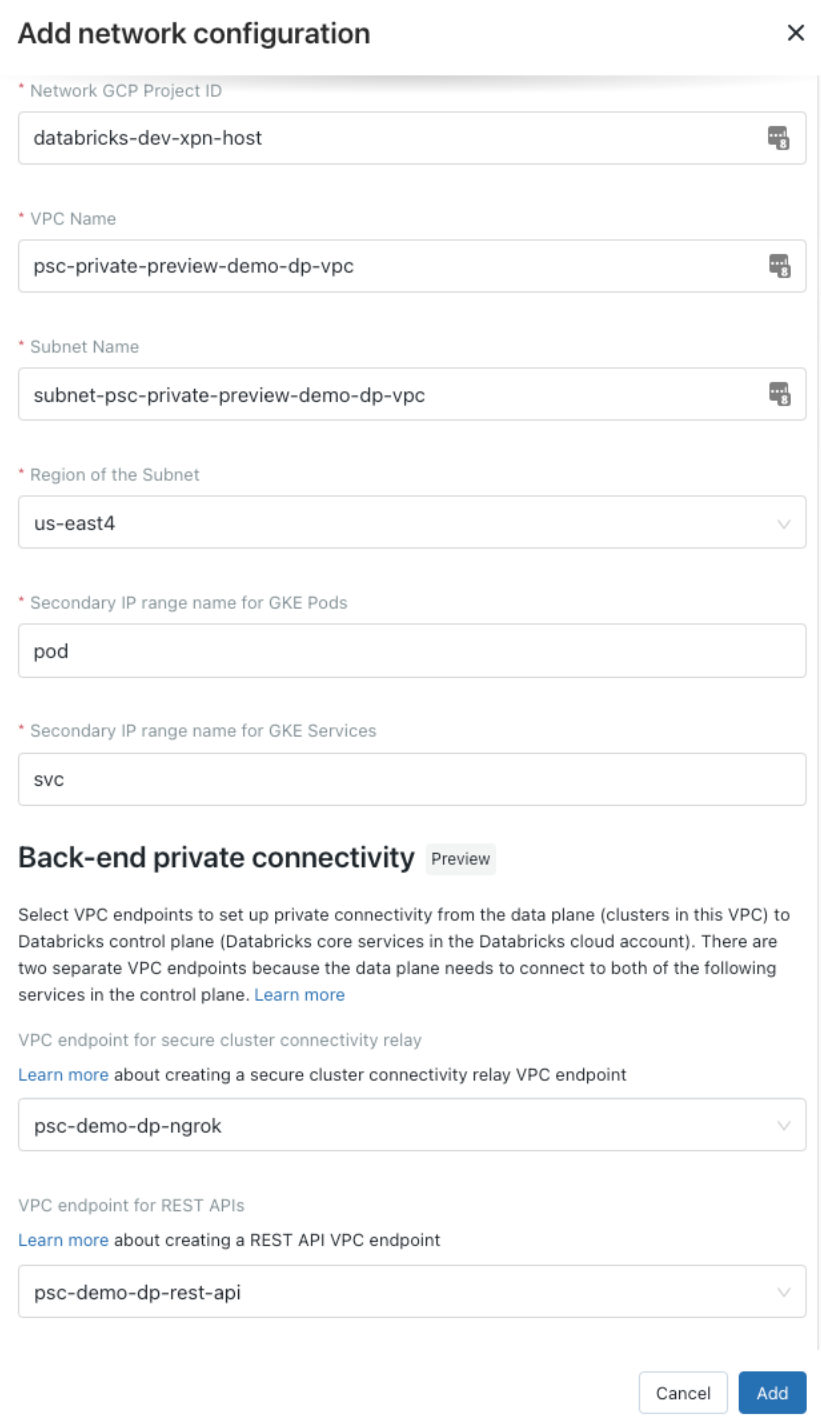

Step 7: Create a network configuration

Create a Databricks network configuration, which encapsulates information about your customer-managed VPC for your workspace. This object will be attached to your workspace.

Use the account console

To create a network configuration using the account console:

If you have not already created your VPC for your workspace, do that now.

Go to the Databricks account console.

Click the Cloud resources tab, then Network configurations.

Click Add Network configuration.

Field |

Example value |

|---|---|

Network configuration name |

|

Network GCP project ID |

|

VPC Name |

|

Subnet Name |

|

Region of the subnet |

|

Secondary IP range name for GKE Pods |

|

Secondary IP range name for GKE Services |

|

VPC endpoint for secure cluster connectivity relay |

|

VPC endpoint for REST APIs (back-end connection to workspace) |

|

Use the API

For API reference information, see the API Reference docs, particularly for networks.

To create Databricks network configuration object using a REST API:

If you have not already created your VPC for your workspace, do that now.

If you have not done it already, or if your token has expired, create a Google ID token. Follow the instructions on Authentication with Google ID tokens for account-level APIs. Creating a network configuration also requires a Google access token, in addition to the Google ID token.

Using `curl` or another REST API client, make a `POST` request to the `accounts.gcp.databricks.com` server and call the `/accounts/<account-id>/networks` endpoint.

Review the required and optional arguments for the create network API. See the documentation for the create network configuration operation for the Account API. The set of arguments for that operation is not duplicated below.

For Private Service Connect support, in the request JSON you must add the argument `vpc_endpoints`. It lists the registered endpoints by their Databricks IDs, separated into properties for the different use cases. Endpoints are defined as arrays, but you provide no more than one VPC endpoint in each array. The two fields are:

`rest_api`: The VPC endpoint for the workspace connection, which is used by the classic compute plane to call REST APIs on the control plane.

`dataplane_relay`: The VPC endpoint for the secure cluster connectivity relay connection.

For example:

"vpc_endpoints": { "rest_api": [ "63d375c1-3ed8-403b-9a3d-a648732c88e1" ], "dataplane_relay": [ "d76a5c4a-0451-4b19-a4a8-b3df93833a26" ] },

Review the response. This returns an object that is similar to the request object but has the following additional field:

account_id: The Databricks account ID.

The following curl example adds the additional required Google access token HTTP header and creates a network configuration:

    curl \
      -X POST \
      --header 'Authorization: Bearer <google-id-token>' \
      --header 'X-Databricks-GCP-SA-Access-Token: <access-token-sa-2>' \
      --header 'Content-Type: application/json' \
      'https://accounts.gcp.databricks.com/api/2.0/accounts/<account-id>/networks' \
      -d '{
        "network_name": "psc-demo-network",
        "gcp_network_info": {
          "network_project_id": "databricks-dev-xpn-host",
          "vpc_id": "psc-demo-dp-vpc",
          "subnet_id": "subnet-psc-demo-dp-vpc",
          "subnet_region": "us-east4",
          "pod_ip_range_name": "pod",
          "service_ip_range_name": "svc"
        },
        "vpc_endpoints": {
          "rest_api": [
            "9999999-3ed8-403b-9a3d-a648732c88e1"
          ],
          "dataplane_relay": [
            "9999999-0451-4b19-a4a8-b3df93833a26"
          ]
        }
      }'

This generates a response similar to:

{

"network_id": "b039f04c-9b72-4973-8b04-97cf8defb1d7",

"account_id": "<real account id>",

"vpc_status": "UNATTACHED",

"network_name": "psc-demo-network",

"creation_time": 1658445719081,

"vpc_endpoints": {

"rest_api": [

"63d375c1-3ed8-403b-9a3d-a648732c88e1"

],

"dataplane_relay": [

"d76a5c4a-0451-4b19-a4a8-b3df93833a26"

]

},

"gcp_network_info": {

"network_project_id": "databricks-dev-xpn-host",

"vpc_id": "psc-demo-dp-vpc",

"subnet_id": "subnet-psc-demo-dp-vpc",

"subnet_region": "us-east4",

"pod_ip_range_name": "pod",

"service_ip_range_name": "svc"

}

}

Tip

If you want to review the set of network configuration objects using the API, make a GET request to the https://accounts.gcp.databricks.com/api/2.0/accounts/<account-id>/networks endpoint.
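The `vpc_endpoints` argument takes arrays but accepts no more than one endpoint ID in each, which is easy to get wrong when the payload is generated by a script. The sketch below shows one way to build and check that fragment. The helper names are hypothetical, and the IDs are the placeholder values from the example above.

```python
def build_vpc_endpoints(rest_api_id: str, dataplane_relay_id: str) -> dict:
    """Wrap each registered Databricks VPC endpoint ID in a single-element array."""
    return {"rest_api": [rest_api_id], "dataplane_relay": [dataplane_relay_id]}


def validate_vpc_endpoints(vpc_endpoints: dict) -> None:
    """Raise ValueError if either array holds more than one endpoint ID."""
    for field in ("rest_api", "dataplane_relay"):
        ids = vpc_endpoints.get(field, [])
        if len(ids) > 1:
            raise ValueError(f"{field} accepts at most one VPC endpoint, got {len(ids)}")


vpc_endpoints = build_vpc_endpoints(
    rest_api_id="9999999-3ed8-403b-9a3d-a648732c88e1",
    dataplane_relay_id="9999999-0451-4b19-a4a8-b3df93833a26",
)
validate_vpc_endpoints(vpc_endpoints)  # passes silently
print(vpc_endpoints)
```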

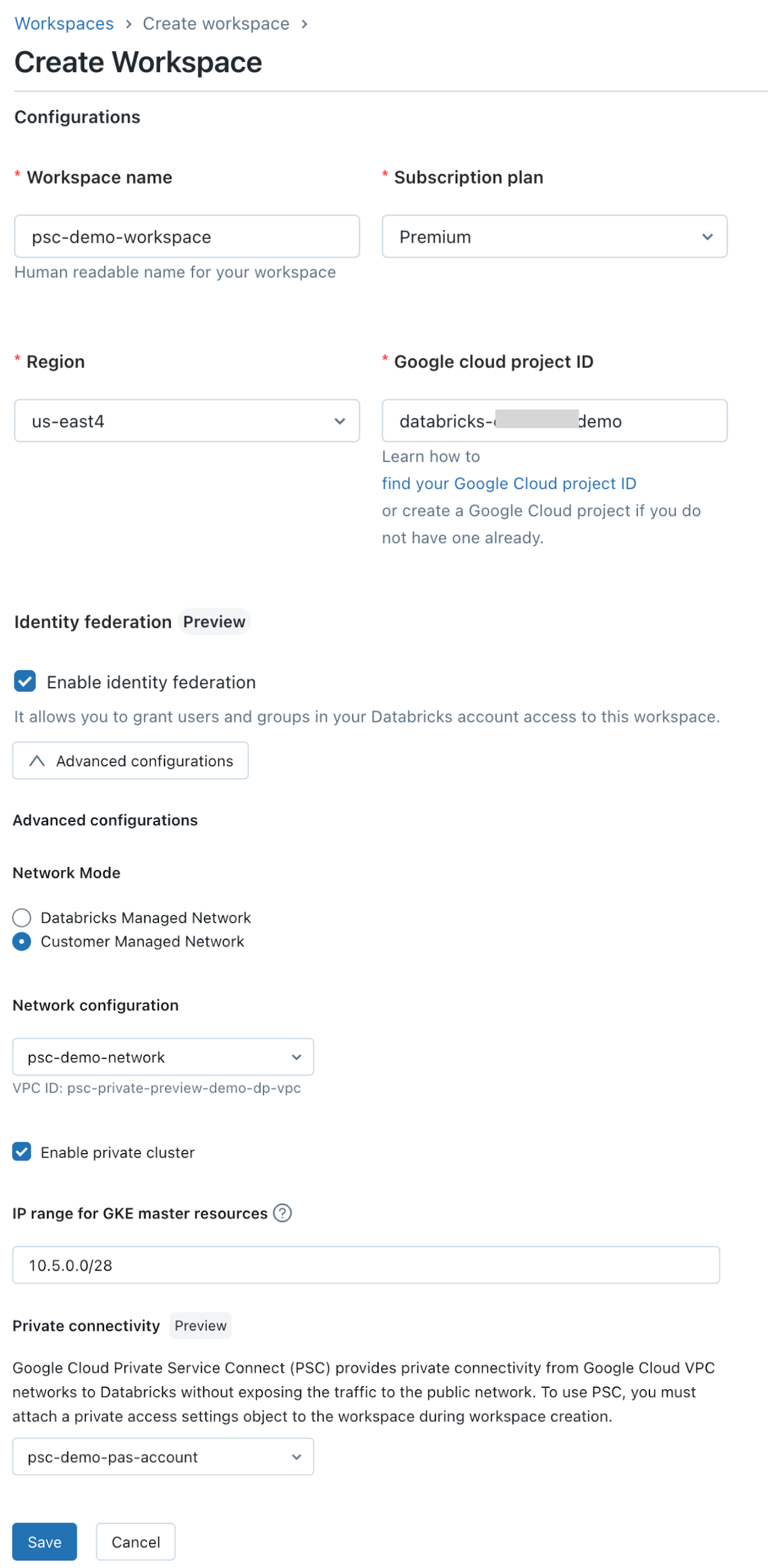

Step 8: Create a workspace

Create a workspace using the network configuration that you created.

Use the account console

To create a workspace with the account console:

Go to the Databricks account console.

Click the Workspaces tab.

Click Create workspace.

Set these standard workspace fields:

Workspace name

Region

Google Cloud project ID (the project for the workspace’s compute resources, which may be different from the project ID for your VPC).

Ensure that Enable private cluster is checked.

IP range for GKE master resources

Set Private Service Connect specific fields:

Click Advanced configurations.

In the Network configuration field, choose your network configuration that you created in previous steps.

In the Private connectivity field, choose your private access settings object that you created in previous steps. Note that one private access settings object can be attached to multiple workspaces.

Click Save.

Use the API

For API reference information, see the API Reference docs, particularly for workspaces.

To create Databricks workspace using a REST API:

If you have not done it already, or if your token has expired, create a Google ID token. Follow the instructions on Authentication with Google ID tokens. Note the following when you follow the instructions on that page:

The API to create a workspace is an account-level API.

The API to create a workspace does require a Google access token, which is mentioned as required for some APIs.

Using `curl` or another REST API client, make a `POST` request to the `accounts.gcp.databricks.com` server and call the `/accounts/<account-id>/workspaces` endpoint.

Review the required and optional arguments for the create workspace API. See the documentation for the create workspace operation for the Account API. The set of arguments for that operation is not duplicated here.

For Private Service Connect support, in the request JSON you must add the argument `private_access_settings_id`. Set it to the Databricks ID for the private access settings object that you created. The ID was in the response field `private_access_settings_id`.

Review the response. This returns an object that is similar to the request object but has additional fields. See the documentation for the create workspace operation for the Account API and click on response code 201 (Success). The set of fields in the response is not duplicated below.

The following curl example adds the additional required Google access token HTTP header and creates a workspace:

curl \
  -X POST \
  --header 'Authorization: Bearer <google-id-token>' \
  --header 'X-Databricks-GCP-SA-Access-Token: <access-token-sa-2>' \
  https://accounts.gcp.databricks.com/api/2.0/accounts/<account-id>/workspaces \
  -H "Content-Type: application/json" \
  -d '{
    "workspace_name" : "psc-demo-workspace",
    "pricing_tier" : "PREMIUM",
    "cloud_resource_container": {
      "gcp": {
        "project_id": "example-project"
      }
    },
    "location": "us-east4",
    "private_access_settings_id": "9999999-95af-4abc-ab7c-b590193a9c74",
    "network_id": "9999999-9b72-4973-8b04-97cf8defb1d7",
    "gke_config": {
      "gke_connectivity_type": "PRIVATE_NODE_PUBLIC_MASTER",
      "gke_cluster_master_ip_range": "10.5.0.0/28"
    }
  }'

This generates a response similar to:

{
  "workspace_id": 999997997552291,
  "workspace_name": "psc-demo-workspace",
  "creation_time": 1669744259011,
  "deployment_name": "7732657997552291.1",
  "workspace_status": "RUNNING",
  "account_id": "<real account id>",
  "workspace_status_message": "Workspace is running.",
  "pricing_tier": "PREMIUM",
  "private_access_settings_id": "93b1ba70-95af-4abc-ab7c-b590193a9c74",
  "location": "us-east4",
  "cloud": "gcp",
  "network_id": "b039f04c-9b72-4973-8b04-97cf8defb1d7",
  "gke_config": {
    "connectivity_type": "PRIVATE_NODE_PUBLIC_MASTER",
    "master_ip_range": "10.5.0.0/28"
  },
  "cloud_resource_container": {
    "gcp": {
      "project_id": "example-project"
    }
  }
}
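The same request can be sketched in Python using only the standard library. This is a minimal sketch rather than an official client: the helper names build_workspace_payload, build_create_workspace_request, and create_workspace are hypothetical, the JSON field names simply mirror the curl example above, and every ID and token is a placeholder you must replace. Check the create workspace operation in the Account API reference before relying on any field name.

```python
import json
import urllib.request

ACCOUNTS_HOST = "https://accounts.gcp.databricks.com"


def build_workspace_payload(name, project_id, location,
                            private_access_settings_id, network_id,
                            master_ip_range):
    """Build the request body; field names mirror the curl example."""
    return {
        "workspace_name": name,
        "pricing_tier": "PREMIUM",
        "cloud_resource_container": {"gcp": {"project_id": project_id}},
        "location": location,
        # Required for Private Service Connect support.
        "private_access_settings_id": private_access_settings_id,
        "network_id": network_id,
        "gke_config": {
            "gke_connectivity_type": "PRIVATE_NODE_PUBLIC_MASTER",
            "gke_cluster_master_ip_range": master_ip_range,
        },
    }


def build_create_workspace_request(account_id, id_token, access_token, payload):
    """Prepare (but do not send) the POST request to the workspaces endpoint."""
    url = f"{ACCOUNTS_HOST}/api/2.0/accounts/{account_id}/workspaces"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {id_token}",
            "X-Databricks-GCP-SA-Access-Token": access_token,
            "Content-Type": "application/json",
        },
    )


def create_workspace(request):
    """Send the prepared request and return the decoded JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Separating request construction from sending makes it possible to inspect the URL, headers, and body before any call reaches the account API.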

Tip

If you want to review the set of workspaces using the API, make a GET request to the https://accounts.gcp.databricks.com/api/2.0/accounts/<account-id>/workspaces endpoint.

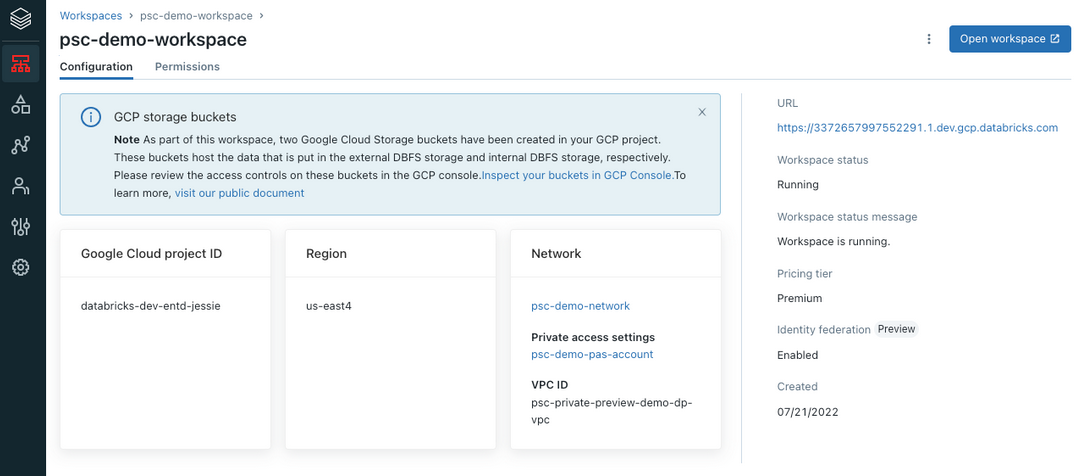

Step 9: Validate the workspace configuration

After you create the workspace, go back to the Workspaces page and find your newly created workspace. It typically takes between 30 seconds and 3 minutes for the workspace to transition from PROVISIONING status to RUNNING status. After the status changes to RUNNING, your workspace is configured successfully.

You can validate the configuration using the Databricks account console:

Click Cloud resources and then Network configurations. Find the network configuration for your VPC using the account console. Review it to confirm all fields are correct.

Click Workspaces and find the workspace. Confirm that the workspace is running:

Tip

If you want to review the set of workspaces using the API, make a GET request to the https://accounts.gcp.databricks.com/api/2.0/accounts/<account-id>/workspaces endpoint.
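If you check the status from a script instead of the account console, the wait for RUNNING can be sketched as a small polling loop. This is an illustrative sketch: wait_until_running is a hypothetical helper, fetch_status stands for any function of yours that returns the workspace_status string (for example, by reading the response from the workspaces endpoint), and treating every status other than PROVISIONING or RUNNING as an error is an assumption.

```python
import time


def wait_until_running(fetch_status, timeout_s=300.0, interval_s=10.0,
                       sleep=time.sleep, clock=time.monotonic):
    """Poll fetch_status() until it returns "RUNNING" or the timeout expires.

    fetch_status is any zero-argument callable that returns the current
    workspace_status string.
    """
    deadline = clock() + timeout_s
    while True:
        status = fetch_status()
        if status == "RUNNING":
            return status
        if status != "PROVISIONING":
            # Assumption: any other state means provisioning will not finish.
            raise RuntimeError(f"unexpected workspace status: {status}")
        if clock() >= deadline:
            raise TimeoutError("workspace did not reach RUNNING in time")
        sleep(interval_s)
```

The default timeout of 300 seconds is chosen to sit above the typical 3 minute upper bound mentioned in this step; adjust it to suit your environment.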

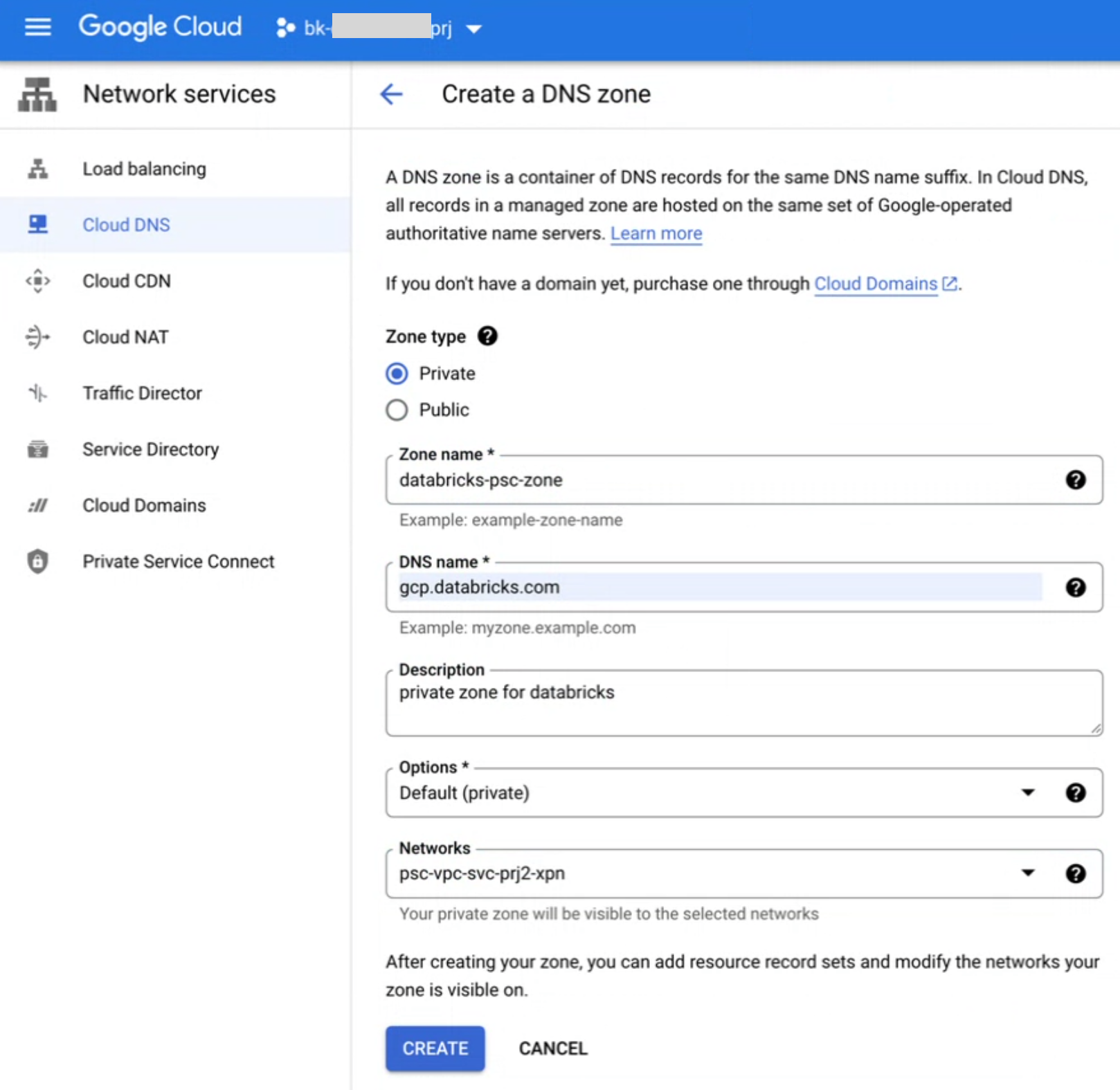

Step 10: Configure DNS

The following sections describe the separate steps of front-end and back-end DNS configuration.

Front-end DNS configuration

This section shows how to create a private DNS zone for front-end connectivity.

You can share one transit VPC for multiple workspaces. However, each transit VPC must contain only workspaces that use front-end PSC, or only workspaces that do not use front-end PSC. Due to the way DNS resolution works on Google Cloud, you cannot use both types of workspaces with a single transit VPC.

Ensure that you have your workspace URL for your deployed Databricks workspace. This has a form similar to https://33333333333333.3.gcp.databricks.com. You can get this URL from the web browser when you are viewing a workspace or from the account console in its list of workspaces.

Create a private DNS zone that includes the transit VPC network. Using Google Cloud Console in the Cloud DNS page, click CREATE ZONE.

In the DNS name field, type gcp.databricks.com.

In the Networks field, choose your transit VPC network.

Click CREATE.

Create DNS

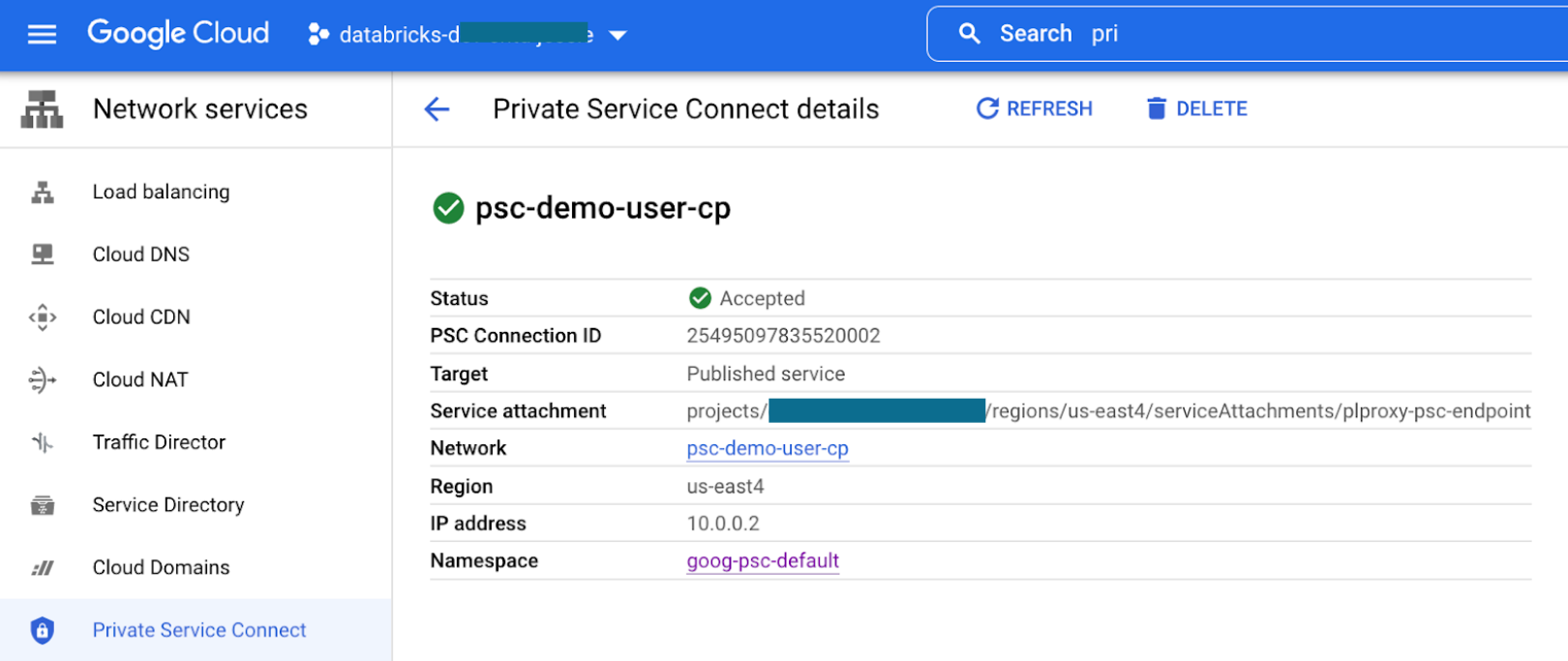

Arecords to map your workspace URL to theplproxy-psc-endpoint-all-portsPrivate Service Connect endpoint IP.Locate the Private Service Connect endpoint IP for the

plproxy-psc-endpoint-all-portsPrivate Service Connect endpoint. In this example, suppose the IP for the Private Service Connect endpointpsc-demo-user-cpis10.0.0.2.Create an

Arecord to map the workspace URL to the Private Service Connect endpoint IP. In this case, map your unique workspace domain name (such as33333333333333333.3.gcp.databricks.com) to the IP address for the Private Service Connect endpoint, which in our previous example was10.0.0.2but your number may be different.Create an

Arecord to mapdp-<workspace-url>to the Private Service Connect endpoint IP. In this case, using the example workspace URL it would mapdp-333333333333333.3.gcp.databricks.comto10.0.0.2, but those values may be different for you.

If users will use a web browser in the user VPC to access the workspace, to support authentication you must create an A record to map <workspace-gcp-region>.psc-auth.gcp.databricks.com to 10.0.0.2. In this case, map us-east4.psc-auth.gcp.databricks.com to 10.0.0.2. For front-end connectivity, this step typically is needed, but if you plan front-end connectivity from the transit network only for REST APIs (not web browser user access), you can omit this step.
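The front-end records described above can be derived mechanically from the workspace URL, the region, and the endpoint IP. The following is a minimal sketch for planning or reviewing the record set; front_end_a_records is a hypothetical helper, and it does not create anything in Cloud DNS.

```python
from urllib.parse import urlparse


def front_end_a_records(workspace_url, region, endpoint_ip, browser_access=True):
    """Return (dns_name, ip) pairs for the front-end private DNS zone."""
    # Accept either a full URL or a bare host name.
    host = urlparse(workspace_url).hostname or workspace_url
    records = [(host, endpoint_ip), (f"dp-{host}", endpoint_ip)]
    if browser_access:
        # Needed only when users sign in through a web browser.
        records.append((f"{region}.psc-auth.gcp.databricks.com", endpoint_ip))
    return records


for name, ip in front_end_a_records(
        "https://33333333333333.3.gcp.databricks.com", "us-east4", "10.0.0.2"):
    print(f"{name} -> {ip}")
```

Passing browser_access=False drops the psc-auth record, which matches the case where the transit network is used only for REST API access.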

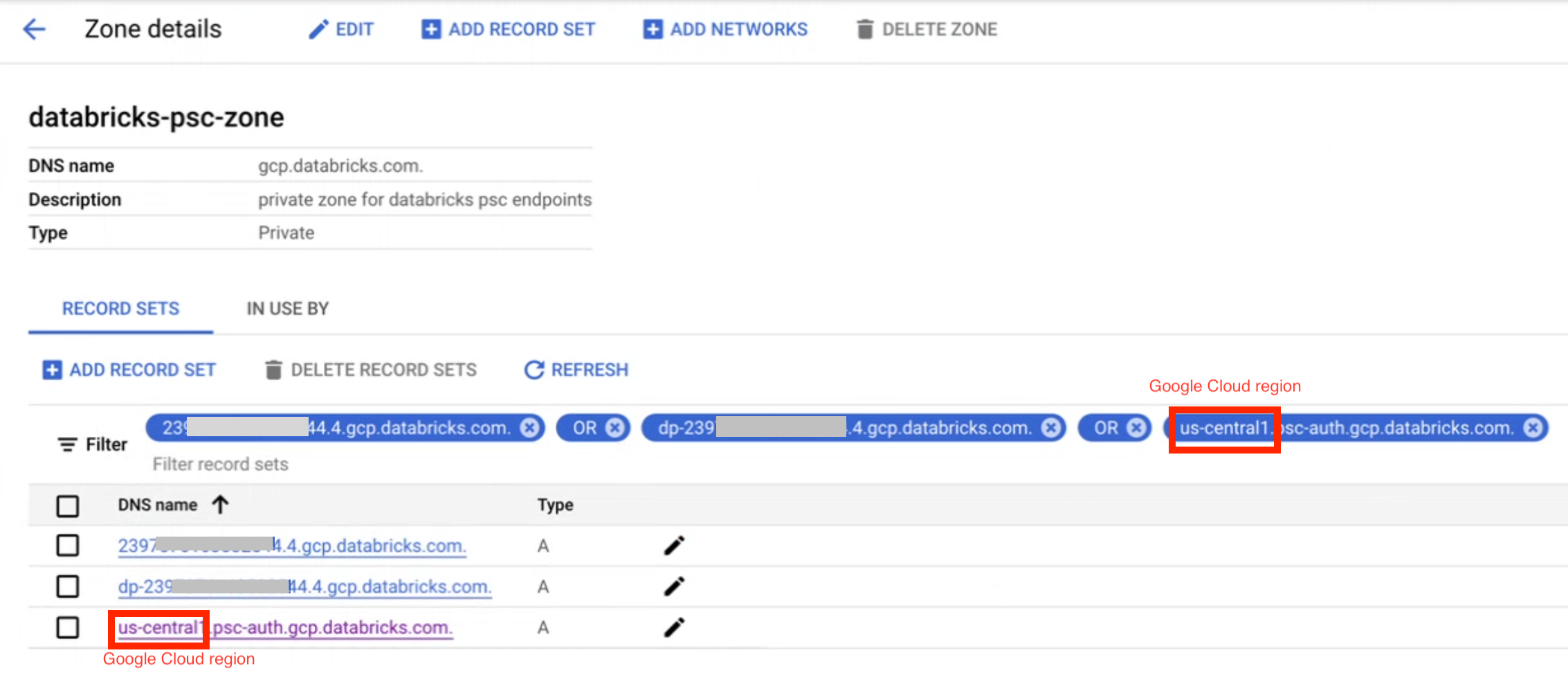

The following shows how Google Cloud console shows an accepted endpoint for front-end Private Service Connect DNS configuration:

Your zone’s front-end DNS configuration with A records that map to your workspace URL and the Databricks authentication service looks generally like the following:

Back-end DNS configuration

This section shows how to create a private DNS zone that includes the compute plane VPC network. You need to create DNS records that map the workspace URL to the plproxy-psc-endpoint-all-ports Private Service Connect endpoint IP and the SCC relay URL to the ngrok-psc-endpoint Private Service Connect endpoint IP:

Ensure that you have your workspace URL for your deployed Databricks workspace. This has a form similar to https://33333333333333.3.gcp.databricks.com. You can get this URL from the web browser when you are viewing a workspace or from the account console in its list of workspaces.

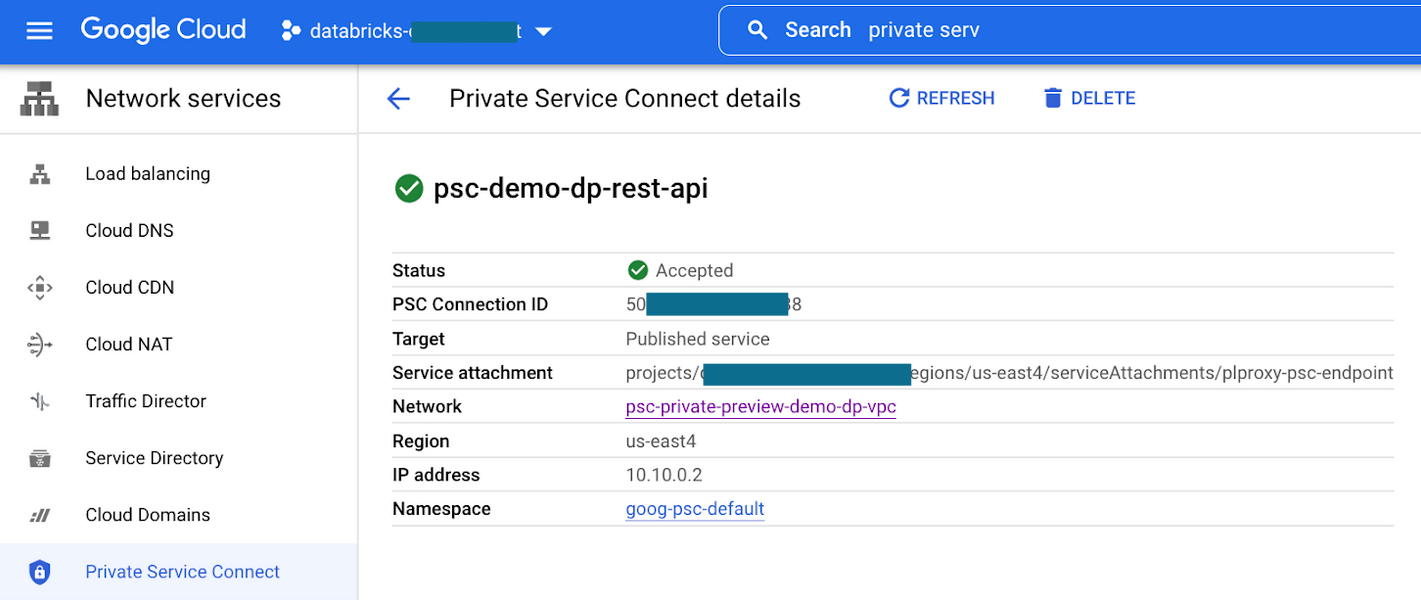

Locate the Private Service Connect endpoint IP for the plproxy-psc-endpoint-all-ports Private Service Connect endpoint. Use a tool such as nslookup to get the IP address.

In this example, the IP for the Private Service Connect endpoint psc-demo-dp-rest-api is 10.10.0.2.

The following shows how Google Cloud console shows an accepted endpoint for back-end Private Service Connect DNS configuration:

Create the following A record mappings:

Your workspace domain (such as 33333333333333.3.gcp.databricks.com) to 10.10.0.2

Your workspace domain with prefix dp- (such as dp-33333333333333.3.gcp.databricks.com) to 10.10.0.2

In the same zone of gcp.databricks.com, create a private DNS record to map the SCC relay URL to the SCC relay endpoint ngrok-psc-endpoint using its endpoint IP.

The SCC relay URL is of the format: tunnel.<workspace-gcp-region>.gcp.databricks.com. In this example, the SCC relay URL is tunnel.us-east4.gcp.databricks.com.

Locate the Private Service Connect endpoint IP for the ngrok-psc-endpoint Private Service Connect endpoint. In this example, the IP for the Private Service Connect endpoint psc-demo-dp-ngrok is 10.10.0.3.

Create an A record to map tunnel.us-east4.gcp.databricks.com to 10.10.0.3.
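The complete back-end record set can be written down as a small mapping, which is handy for review before you enter the records in Cloud DNS. This is a sketch under stated assumptions: back_end_a_records and to_zone_lines are hypothetical helpers, the IP addresses are the example values from this section, and the TTL of 300 seconds is an arbitrary illustrative choice.

```python
def back_end_a_records(workspace_host, region, rest_api_ip, relay_ip):
    """Map each back-end DNS name to the endpoint IP it should resolve to."""
    return {
        # Both workspace names point at the plproxy (REST API) endpoint.
        workspace_host: rest_api_ip,
        f"dp-{workspace_host}": rest_api_ip,
        # The SCC relay name points at the ngrok (relay) endpoint.
        f"tunnel.{region}.gcp.databricks.com": relay_ip,
    }


def to_zone_lines(records, ttl=300):
    """Render the mapping as zone-file style A record lines."""
    return [f"{name}. {ttl} IN A {ip}" for name, ip in records.items()]


records = back_end_a_records(
    "33333333333333.3.gcp.databricks.com", "us-east4", "10.10.0.2", "10.10.0.3")
print("\n".join(to_zone_lines(records)))
```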

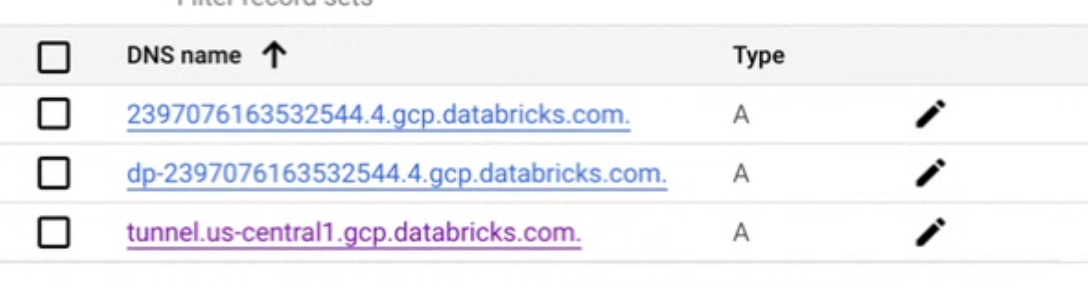

The list of A records in your zone looks generally like the following:

Validate your DNS configuration

In your VPC networks, make sure your DNS records are configured correctly:

In your transit VPC network, use the nslookup tool to confirm the following URLs now resolve to the front-end Private Service Connect endpoint IP.

<workspace-url>

dp-<workspace-url>

<workspace-gcp-region>.psc-auth.gcp.databricks.com

In your compute plane VPC network, use the nslookup tool to confirm the following URLs resolve to the correct Private Service Connect endpoint IP:

<workspace-url> maps to the Private Service Connect endpoint IP for the endpoint with plproxy-psc-endpoint-all-ports in its name.

dp-<workspace-url> maps to the Private Service Connect endpoint IP for the endpoint with plproxy-psc-endpoint-all-ports in its name.

tunnel.<workspace-gcp-region>.gcp.databricks.com maps to the Private Service Connect endpoint IP for the endpoint with ngrok-psc-endpoint in its name.
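As an alternative to running nslookup by hand for each name, the same check can be scripted. The following is a minimal sketch, assuming it runs on a machine inside the VPC network you are validating; find_dns_mismatches is a hypothetical helper, and socket.gethostbyname returns only one IPv4 address per name, so it is a coarse check.

```python
import socket


def find_dns_mismatches(expected, resolve=socket.gethostbyname):
    """Return {name: (expected_ip, actual)} for names that resolve wrongly."""
    mismatches = {}
    for name, expected_ip in expected.items():
        try:
            actual = resolve(name)
        except OSError as error:
            # socket.gaierror is a subclass of OSError.
            actual = f"lookup failed: {error}"
        if actual != expected_ip:
            mismatches[name] = (expected_ip, actual)
    return mismatches
```

For the compute plane VPC in this article's example, you would pass a mapping such as {"33333333333333.3.gcp.databricks.com": "10.10.0.2", "dp-33333333333333.3.gcp.databricks.com": "10.10.0.2", "tunnel.us-east4.gcp.databricks.com": "10.10.0.3"}; an empty result means every name resolved to the expected endpoint IP.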

Intermediate DNS name for Private Service Connect

The intermediate DNS name for workspaces that enable either back-end or front-end Private Service Connect is <workspace-gcp-region>.psc.gcp.databricks.com. This allows you to separate traffic for the workspaces that users need to access from traffic for other Databricks services that don’t support Private Service Connect, such as documentation.

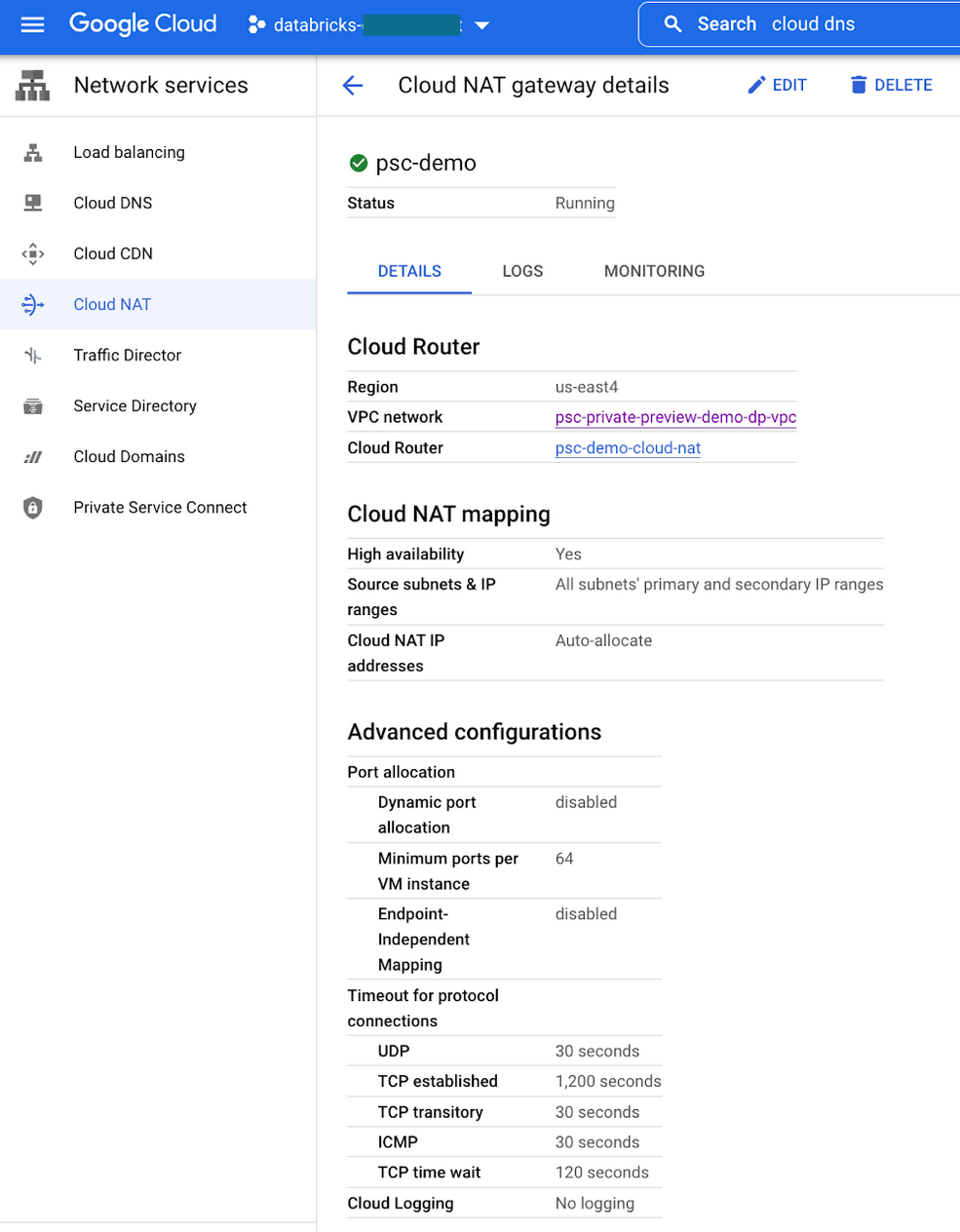

Step 11 (optional): Configure metastore access

Features such as SQL access control lists (ACLs) require access to the metastore. Since the compute plane VPC cannot access the public internet by default, you must create a Cloud NAT with access to the metastore. See Control plane service endpoint IP addresses by region.

You may additionally configure a firewall to prevent ingress and egress traffic from all other sources. Alternatively, if you do not want to configure a Cloud NAT for your VPC, another option is to configure a private connection to an external metastore.

Step 12 (optional): Configure IP access lists

Front-end connections from the user to Private Service Connect workspaces allow public access by default.

You can choose whether to allow or deny public access to a workspace when you create a private access settings object. See Step 6: Create a Databricks private access settings object.

If you choose to deny public access, no public access to the workspace is allowed.

If you choose to allow public access, you can configure IP access lists for your Databricks workspace. IP access lists only apply to requests over the internet originating from public IP addresses. You cannot use IP access lists to block private traffic from Private Service Connect.

To block all access from the internet:

Enable IP access lists for the workspace. See Configure IP access lists for workspaces.

Create a BLOCK 0.0.0.0/0 IP access list.

Note that requests from VPC networks connected using Private Service Connect are not affected by IP access lists. Connections are authorized using the Private Service Connect access level configuration. See related section Step 6: Create a Databricks private access settings object.
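To see why a single 0.0.0.0/0 entry blocks all internet access, note that this CIDR range contains every IPv4 address. The sketch below checks that with the standard ipaddress module and builds a request body for a block list. The body shape (label, list_type, ip_addresses) and the label value are assumptions for illustration; confirm them against the IP access lists documentation before use.

```python
import ipaddress

BLOCK_ALL = "0.0.0.0/0"


def block_all_payload(label="block-all-public"):
    """Build an assumed request body for a BLOCK list covering all IPv4."""
    return {"label": label, "list_type": "BLOCK", "ip_addresses": [BLOCK_ALL]}


def is_covered(ip, cidr=BLOCK_ALL):
    """Return True if the IP address falls inside the CIDR range."""
    return ipaddress.ip_address(ip) in ipaddress.ip_network(cidr)


print(block_all_payload())
print(is_covered("203.0.113.7"))
```

The check says nothing about Private Service Connect traffic, which IP access lists do not evaluate.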

Step 13 (optional): Configure VPC Service Controls

In addition to using Private Service Connect to privately connect to the Databricks service, you can configure VPC Service Controls to keep your traffic private and mitigate data exfiltration risks.

Configure back-end private access from the compute plane VPC to Cloud Storage

You can configure Private Google Access or Private Service Connect to privately access cloud storage resources from your compute plane VPC.

Add your compute plane projects to a VPC Service Controls Service Perimeter

For each Databricks workspace, you can add the following Google Cloud projects to a VPC Service Controls service perimeter:

Compute plane VPC host project

Project containing the workspace storage bucket

Service projects containing the compute resources of the workspace

With this configuration, you need to grant access to both of the following:

The compute resources and workspace storage bucket from the Databricks control plane

Databricks-managed storage buckets from the compute plane VPC

You can grant the above access with the following ingress and egress rules on the above VPC Service Controls service perimeter.

To get the project numbers for these ingress and egress rules, see Private Service Connect (PSC) attachment URIs and project numbers.

Ingress rule

You need to add an ingress rule to grant access to your VPC Service Controls Service Perimeter from the Databricks control plane VPC. The following is an example ingress rule:

From:

Identities: ANY_IDENTITY

Source > Projects =

<regional-control-plane-vpc-host-project-number>

<regional-control-plane-uc-project-number>

<regional-control-plane-audit-log-delivery-project-number>

To:

Projects =

<list of compute plane Project Ids>

Services =

Service name: storage.googleapis.com

Service methods: All actions

Service name: compute.googleapis.com

Service methods: All actions

Service name: container.googleapis.com

Service methods: All actions

Service name: logging.googleapis.com

Service methods: All actions

Service name: cloudresourcemanager.googleapis.com

Service methods: All actions

Service name: iam.googleapis.com

Service methods: All actions
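If you manage perimeters as code, the example ingress rule can be expressed as a structured object. The following is a sketch that assumes the Access Context Manager ingress policy shape (ingressFrom, ingressTo, operations, methodSelectors); ingress_rule is a hypothetical helper, and the project numbers are placeholders that you must take from your own deployment and from the Databricks list of regional project numbers.

```python
import json

# Services from the example ingress rule, each allowed for all methods.
INGRESS_SERVICES = [
    "storage.googleapis.com",
    "compute.googleapis.com",
    "container.googleapis.com",
    "logging.googleapis.com",
    "cloudresourcemanager.googleapis.com",
    "iam.googleapis.com",
]


def ingress_rule(control_plane_projects, compute_plane_projects):
    """Build one ingress policy; projects are given as project numbers."""
    return {
        "ingressFrom": {
            "identityType": "ANY_IDENTITY",
            "sources": [
                {"resource": f"projects/{number}"}
                for number in control_plane_projects
            ],
        },
        "ingressTo": {
            "resources": [f"projects/{number}" for number in compute_plane_projects],
            "operations": [
                {"serviceName": service, "methodSelectors": [{"method": "*"}]}
                for service in INGRESS_SERVICES
            ],
        },
    }


# Placeholder project numbers, for illustration only.
print(json.dumps(ingress_rule(["111", "222", "333"], ["444"]), indent=2))
```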

Egress rule

You need to add an egress rule to grant access to Databricks-managed storage buckets from the compute plane VPC. The following is an example egress rule:

From:

Identities: ANY_IDENTITY

To:

Projects =

<regional-control-plane-asset-project-number>

<regional-control-plane-vpc-host-project-number>

Services =

Service name: storage.googleapis.com

Service methods: All actions

Service name: artifactregistry.googleapis.com

Service methods: artifactregistry.googleapis.com/DockerRead
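The example egress rule can be written as a structured object in the same way. This sketch again assumes the Access Context Manager egress policy shape (egressFrom, egressTo, operations, methodSelectors); egress_rule is a hypothetical helper and the project numbers are placeholders.

```python
import json


def egress_rule(asset_project, vpc_host_project):
    """Build one egress policy toward the Databricks-managed projects."""
    return {
        "egressFrom": {"identityType": "ANY_IDENTITY"},
        "egressTo": {
            "resources": [
                f"projects/{asset_project}",
                f"projects/{vpc_host_project}",
            ],
            "operations": [
                {
                    # All Cloud Storage actions are allowed.
                    "serviceName": "storage.googleapis.com",
                    "methodSelectors": [{"method": "*"}],
                },
                {
                    # Artifact Registry is limited to reading Docker images.
                    "serviceName": "artifactregistry.googleapis.com",
                    "methodSelectors": [
                        {"method": "artifactregistry.googleapis.com/DockerRead"}
                    ],
                },
            ],
        },
    }


# Placeholder project numbers, for illustration only.
print(json.dumps(egress_rule("555", "111"), indent=2))
```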

Access data lake storage buckets secured by VPC Service Controls

You can add the Google Cloud projects containing the data lake storage buckets to a VPC Service Controls Service Perimeter.

You do not require any additional ingress or egress rules if the data lake storage buckets and the Databricks workspace projects are in the same VPC Service Controls Service Perimeter.

If the data lake storage buckets are in a separate VPC Service Controls Service Perimeter, you need to configure the following:

Ingress rules on data lake Service Perimeter:

Allow access to Cloud Storage from the Databricks compute plane VPC

Allow access to Cloud Storage from the Databricks control plane VPC using the project IDs documented on the regions page. This access will be required as Databricks introduces new data governance features such as Unity Catalog.

Egress rules on Databricks compute plane Service Perimeter:

Allow egress to Cloud Storage on data lake Projects