Create and manage catalogs

This article shows how to create and manage catalogs in Unity Catalog. A catalog contains schemas (databases), and a schema contains tables, views, volumes, models, and functions.

Note

In workspaces that were enabled for Unity Catalog automatically, a workspace catalog was created for you by default. All users in your workspace (and only your workspace) have access to it by default. See Step 1: Confirm that your workspace is enabled for Unity Catalog.

Note

To learn how to create a foreign catalog, a Unity Catalog object that mirrors a database in an external data system, see Create a foreign catalog. See also Manage and work with foreign catalogs.

Requirements

To create a catalog, regardless of catalog type:

You must be a Databricks metastore admin or have the

CREATE CATALOGprivilege on the metastore.You must have a Unity Catalog metastore linked to the workspace where you perform the catalog creation.

The compute resource that you use to run a notebook to create a catalog must be on Databricks Runtime 11.3 or above and must use a Unity Catalog-compliant access mode. See Access modes. SQL warehouses always support Unity Catalog.

To create a shared catalog:

The Delta Sharing share must already exist in your workspace. See How do I make shared data available to my team?.

You must be a metastore admin, have the

USE PROVIDERprivilege on the metastore, or own the provider object that includes the share.

To create a standard catalog:

If you specify a managed storage location for the catalog, you must have the

CREATE MANAGED STORAGEprivilege on the target external location.If no metastore-level managed storage exists, then you must specify a managed storage location for the catalog.

To create a foreign catalog:

You must be either the owner of the connection that you use to create the foreign catalog or have the

CREATE FOREIGN CATALOGprivilege on the connection.You must use compute on Databricks Runtime 13.1 or above. SQL warehouses must be Pro or Serverless.

Create a catalog

To create a catalog, you can use Catalog Explorer, a SQL command, the REST API, the Databricks CLI, or Terraform. When you create a catalog, two schemas (databases) are automatically created: default and information_schema.

Log in to a workspace that is linked to the metastore.

Click

Catalog.

Catalog.Click the Create Catalog button.

On the Create a new catalog dialog, enter a Catalog name and select the catalog Type that you want to create:

Standard catalog: a securable object that organizes data and AI assets that are managed by Unity Catalog. For all use cases except Lakehouse Federation and catalogs created from Delta Sharing shares.

Foreign catalog: a securable object that mirrors a database in an external data system using Lakehouse Federation. See Overview of Lakehouse Federation setup.

Shared catalog: a securable object that organizes data and other assets that are shared with you as a Delta Sharing share. Creating a catalog from a share makes those assets available for users in your workspace to read. See What is a share?.

Depending on the catalog type, specify the following options:

For standard catalogs: It is optional but strongly recommended that you specify a managed Storage location. You must have the

CREATE MANAGED STORAGEprivilege on the target external location that you use as a managed storage location. See Specify a managed storage location in Unity Catalog.You can optionally specify a subpath of a defined external location. If you don’t have an external location defined, you can create one by clicking Create a new external location.

Important

If your workspace does not have a metastore-level storage location, you must specify a managed storage location when you create a catalog.

For foreign catalogs: You must select a Connection, which is a securable object in Unity Catalog that specifies a path and credentials for accessing an external database system. See Overview of Lakehouse Federation setup.

When you’ve selected the connection, enter the name of the Database or other Databricks Catalog that you want to mirror.

Requirements differ depending on the data source:

MySQL uses a two-layer namespace and therefore does not require a database name.

For connections to a catalog in another Databricks workspace, enter the Databricks Catalog name instead of a database name.

If you don’t have a connection defined, you can create one by clicking Create a new connection.

For shared catalogs: You must select a Provider and a Share. Once you have selected the provider, you can select one of the provider’s shares.

If you aren’t sure which provider and share you want, you might find it easier to browse or search for providers and shares using the Catalog Explorer browser. See View providers and View shares that a provider has shared with you.

Click Create.

On the Catalog created! dialog, click View catalog to accept the catalog as automatically configured, or click Configure catalog (recommended) to configure catalog permissions, workspace bindings, and metadata.

If you click View catalog, the catalog will be accessible to all workspaces attached to the metastore, and the

BROWSEprivilege will be granted to all account users. You can skip the rest of the steps in this procedure and proceed to adding schemas to the catalog. See Create and manage schemas (databases).You can return to the catalog details page in Catalog Explorer at any time to update the owner, tags, comments, workspace bindings, permissions, and schemas.

On the Configure catalog > Workspaces page, specify the workspace that the catalog is bound to.

By default, the catalog is shared with all workspaces attached to the current metastore. If the catalog will contain data that should be restricted to specific workspaces, clear the All workspace have access option and use the Assign to workspaces button to add those workspaces. The current workspace must be included.

After you assign a workspace, you can optionally change its default Read & Write access level to Read Only: select the workspace from the list and click the Manage Access Level button.

For more information, see (Optional) Assign a catalog to specific workspaces.

Click Next to assign Permissions for your catalog.

All account users have the

BROWSEprivilege by default, giving them the ability to discover catalog metadata without having read access to the data.To grant a privilege to a user, group, or service principal, click Grant and follow the instructions in Unity Catalog privileges and securable objects.

Click Next to add optional tag and comment Metadata.

Tags are key-value pairs that you can use to organize and categorize catalogs to simplify search and discovery of your data assets. Values are optional. See Apply tags to Unity Catalog securable objects.

A Comment is an open-ended field that is often used to provide a description to help users discover the data assets in the catalog. See Document data in Catalog Explorer using markdown comments.

Click Save.

Add schemas and data objects to the catalog.

You can use Catalog Explorer to update the owner, tags, comments, workspace bindings, and permissions any time after you have created the catalog.

To create a standard catalog, run the following SQL command in a notebook or SQL query editor. Items in brackets are optional. Replace the placeholder values:

<catalog-name>: A name for the catalog.<location-path>: Optional but strongly recommended. Provide a storage location path if you want managed tables in this catalog to be stored in a location that is different than the default root storage configured for the metastore.Important

If your workspace does not have a metastore-level storage location, you must specify a managed storage location when you create a catalog.

This path must be defined in an external location configuration, and you must have the

CREATE MANAGED STORAGEprivilege on the external location configuration. You can use the path that is defined in the external location configuration or a subpath (in other words,'gs://depts/finance'or'gs://depts/finance/product').<comment>: Optional description or other comment.

CREATE CATALOG [ IF NOT EXISTS ] <catalog-name>

[ MANAGED LOCATION '<location-path>' ]

[ COMMENT <comment> ];

For example, to create a catalog named example:

CREATE CATALOG IF NOT EXISTS example;

To create a shared catalog, run the following command in a notebook or SQL query editor. Items in brackets are optional.

CREATE CATALOG [IF NOT EXISTS] <catalog-name>

USING SHARE <provider-name>.<share-name>;

[ COMMENT <comment> ];

To create a foreign catalog, run the following command in a notebook or SQL query editor. Items in brackets are optional. Replace the placeholder values:

<catalog-name>: Name for the catalog in Databricks.<connection-name>: The connection object that specifies the data source, path, and access credentials.<database-name>: Name of the database you want to mirror as a catalog in Databricks. Not required for MySQL, which uses a two-layer namespace. For Databricks-to-Databricks Lakehouse Federation, usecatalog '<external-catalog-name>'instead.<external-catalog-name>: Databricks-to-Databricks only: Name of the catalog in the external Databricks workspace that you are mirroring. See Run federated queries on another Databricks workspace.

CREATE FOREIGN CATALOG [IF NOT EXISTS] <catalog-name> USING CONNECTION <connection-name>

OPTIONS [(database '<database-name>') | (catalog '<external-catalog-name>')];

See also Create a foreign catalog.

To limit catalog access to specific workspaces in your account, also known as workspace-catalog binding, see Bind a catalog to one or more workspaces.

For detailed parameter descriptions, see CREATE CATALOG.

After you’ve created the catalog, assign privileges to the catalog. See Unity Catalog privileges and securable objects.

Terraform: You can create a catalog using the Databricks Terraform provider and databricks_catalog. You can retrieve information about catalogs using databricks_catalogs.

REST API: Use POST /api/2.1/unity-catalog/catalogs.

Databricks CLI: databricks catalogs create <catalog-name> [options]

For a list of options, run databricks catalogs create -h. Options are also described in the API reference. Follow the API link in the preceding paragraph.

After you’ve created the catalog, assign privileges to the catalog. See Unity Catalog privileges and securable objects.

(Optional) Assign a catalog to specific workspaces

If you use workspaces to isolate user data access, you may want to limit catalog access to specific workspaces in your account, also known as workspace-catalog binding. The default is to share the catalog with all workspaces attached to the current metastore.

The exception to this default is the workspace catalog that is created by default in workspaces that are enabled for Unity Catalog automatically (see Automatic enablement of Unity Catalog). By default, this workspace catalog is bound only to your workspace, unless you choose to give other workspaces access to it. For important information about assigning permissions if you unbind this catalog, see Unbind a catalog from a workspace.

You can allow read and write access to the catalog from a workspace (the default), or you can specify read-only access. If you specify read-only, then all write operations are blocked from that workspace to that catalog.

Typical use cases for binding a catalog to specific workspaces include:

Ensuring that users can only access production data from a production workspace environment.

Ensuring that users can only process sensitive data from a dedicated workspace.

Giving users read-only access to production data from a developer workspace to enable development and testing.

Note

You can also bind external locations and storage credentials to specific workspaces, limiting the ability to access data in external locations to privileged users in those workspaces. See (Optional) Assign an external location to specific workspaces and (Optional) Assign a storage credential to specific workspaces.

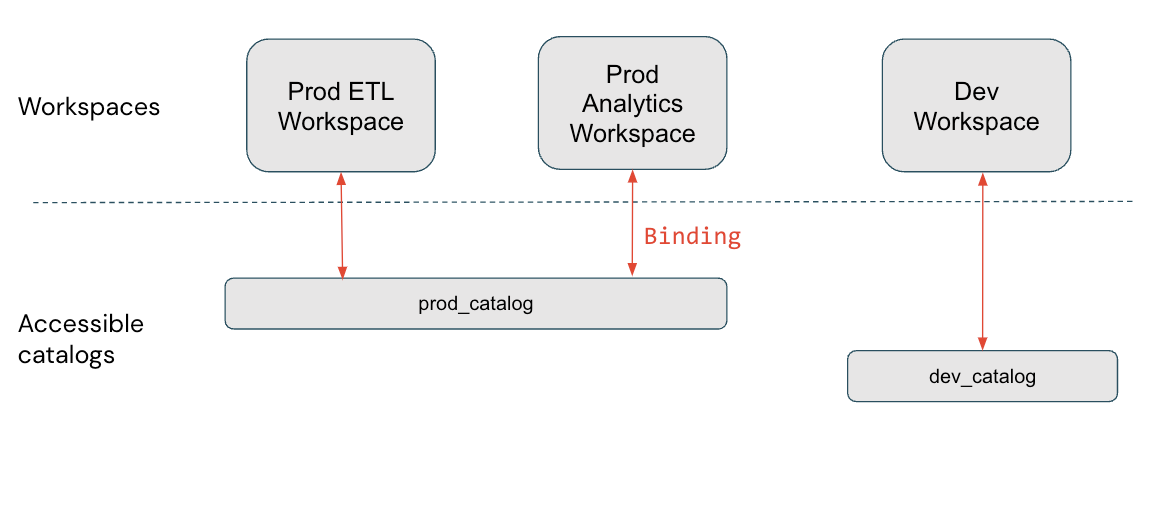

Workspace-catalog binding example

Take the example of production and development isolation. If you specify that your production data catalogs can only be accessed from production workspaces, this supersedes any individual grants that are issued to users.

In this diagram, prod_catalog is bound to two production workspaces. Suppose a user has been granted access to a table in prod_catalog called my_table (using GRANT SELECT ON my_table TO <user>). If the user tries to access my_table in the Dev workspace, they receive an error message. The user can access my_table only from the Prod ETL and Prod Analytics workspaces.

Workspace-catalog bindings are respected in all areas of the platform. For example, if you query the information schema, you see only the catalogs accessible in the workspace where you issue the query. Data lineage and search UIs likewise show only the catalogs that are assigned to the workspace (whether using bindings or by default).

Bind a catalog to one or more workspaces

To assign a catalog to specific workspaces, you can use Catalog Explorer or the Databricks CLI.

Permissions required: Metastore admin or catalog owner.

Note

Metastore admins can see all catalogs in a metastore using Catalog Explorer—and catalog owners can see all catalogs they own in a metastore—regardless of whether the catalog is assigned to the current workspace. Catalogs that are not assigned to the workspace appear grayed out, and no child objects are visible or queryable.

Log in to a workspace that is linked to the metastore.

Click

Catalog.

Catalog.In the Catalog pane, on the left, click the catalog name.

The main Catalog Explorer pane defaults to the Catalogs list. You can also select the catalog there.

On the Workspaces tab, clear the All workspaces have access checkbox.

If your catalog is already bound to one or more workspaces, this checkbox is already cleared.

Click Assign to workspaces and enter or find the workspaces you want to assign.

(Optional) Limit workspace access to read-only.

On the Manage Access Level menu, select Change access to read-only.

You can reverse this selection at any time by editing the catalog and selecting Change access to read & write.

To revoke access, go to the Workspaces tab, select the workspace, and click Revoke.

There are two Databricks CLI command groups and two steps required to assign a catalog to a workspace.

In the following examples, replace <profile-name> with the name of your Databricks authentication configuration profile. It should include the value of a personal access token, in addition to the workspace instance name and workspace ID of the workspace where you generated the personal access token. See Databricks personal access token authentication.

Use the

catalogscommand group’supdatecommand to set the catalog’sisolation modetoISOLATED:databricks catalogs update <my-catalog> \ --isolation-mode ISOLATED \ --profile <profile-name>

The default

isolation-modeisOPENto all workspaces attached to the metastore.Use the

workspace-bindingscommand group’supdate-bindingscommand to assign the workspaces to the catalog:databricks workspace-bindings update-bindings catalog <my-catalog> \ --json '{ "add": [{"workspace_id": <workspace-id>, "binding_type": <binding-type>}...], "remove": [{"workspace_id": <workspace-id>, "binding_type": "<binding-type>}...] }' --profile <profile-name>

Use the

"add"and"remove"properties to add or remove workspace bindings. The<binding-type>can be either“BINDING_TYPE_READ_WRITE”(default) or“BINDING_TYPE_READ_ONLY”.

To list all workspace assignments for a catalog, use the workspace-bindings command group’s get-bindings command:

databricks workspace-bindings get-bindings catalog <my-catalog> \

--profile <profile-name>

Unbind a catalog from a workspace

Instructions for revoking workspace access to a catalog using Catalog Explorer or the workspace-bindings CLI command group are included in Bind a catalog to one or more workspaces.

Important

If your workspace was enabled for Unity Catalog automatically and you have a workspace catalog, workspace admins own that catalog and have all permissions on that catalog in the workspace only. If you unbind that catalog or bind it to other catalogs, you must grant any required permissions manually to the members of the workspace admins group as individual users or using account-level groups, because the workspace admins group is a workspace-local group. For more information about account groups vs workspace-local groups, see Difference between account groups and workspace-local groups.

Add schemas to your catalog

To learn how to add schemas (databases) to your catalog. see Create and manage schemas (databases).

View catalog details

To view information about a catalog, you can use Catalog Explorer or a SQL command.

Log in to a workspace that is linked to the metastore.

Click

Catalog.

Catalog.In the Catalog pane, find the catalog and click its name.

Some details are listed at the top of the page. Others can be viewed on the Schemas, Details, Permissions, and Workspaces tabs.

Run the following SQL command in a notebook or Databricks SQL editor. Items in brackets are optional. Replace the placeholder <catalog-name>.

For details, see DESCRIBE CATALOG.

DESCRIBE CATALOG <catalog-name>;

Use CATALOG EXTENDED to get full details.

Delete a catalog

To delete (or drop) a catalog, you can use Catalog Explorer or a SQL command. To drop a catalog you must be its owner.

You must delete all schemas in the catalog except information_schema before you can delete a catalog. This includes the auto-created default schema.

Log in to a workspace that is linked to the metastore.

Click

Catalog.

Catalog.In the Catalog pane, on the left, click the catalog you want to delete.

In the detail pane, click the kebab menu

to the left of the Create database button and select Delete.

to the left of the Create database button and select Delete.On the Delete catalog dialog, click Delete.

Run the following SQL command in a notebook or Databricks SQL editor. Items in brackets are optional. Replace the placeholder <catalog-name>.

For parameter descriptions, see DROP CATALOG.

If you use DROP CATALOG without the CASCADE option, you must delete all schemas in the catalog except information_schema before you can delete the catalog. This includes the auto-created default schema.

DROP CATALOG [ IF EXISTS ] <catalog-name> [ RESTRICT | CASCADE ]

For example, to delete a catalog named vaccine and its schemas:

DROP CATALOG vaccine CASCADE

Manage the default catalog

A default catalog is configured for each workspace that is enabled for Unity Catalog. The default catalog lets you perform data operations without specifying a catalog. If you omit the top-level catalog name when you perform data operations, the default catalog is assumed.

A workspace admin can view or switch the default catalog using the Admin Settings UI. You can also set the default catalog for a cluster using a Spark config.

Commands that do not specify the catalog (for example GRANT CREATE TABLE ON SCHEMA myschema TO mygroup) are evaluated for the catalog in the following order:

Is the catalog set for the session using a

USE CATALOGstatement or a JDBC setting?Is the Spark configuration

spark.databricks.sql.initial.catalog.namespaceset on the cluster?Is there a workspace default catalog set for the cluster?

The default catalog configuration when Unity Catalog is enabled

The default catalog that was initially configured for your workspace depends on how your workspace was enabled for Unity Catalog:

For some workspaces that were enabled for Unity Catalog automatically, the workspace catalog was set as the default catalog. See Automatic enablement of Unity Catalog.

For all other workspaces, the

hive_metastorecatalog was set as the default catalog.

If you are transitioning from the Hive metastore to Unity Catalog within an existing workspace, it typically makes sense to use hive_metastore as the default catalog to avoid impacting existing code that references the hive metastore.

Change the default catalog

A workspace admin can change the default catalog for the workspace. Anyone with permission to create or edit a cluster can set a different default catalog for the cluster.

Warning

Changing the default catalog can break existing data operations that depend on it.

To configure a different default catalog for a workspace:

Log in to your workspace as a workspace admin.

Click your username in the top bar of the workspace and select Settings from the dropdown.

Click the Advanced tab.

On the Default catalog for the workspace row, enter the catalog name and click Save.

Restart your SQL warehouses and clusters for the change to take effect. All new and restarted SQL warehouses and clusters will use this catalog as the workspace default.

You can also override the default catalog for a specific cluster by setting the following Spark configuration on the cluster. This approach is not available for SQL warehouses:

spark.databricks.sql.initial.catalog.name

For instructions, see Spark configuration.

View the current default catalog

To get the current default catalog for your workspace, you can use a SQL statement in a notebook or SQL Editor query. A workspace admin can get the default catalog using the Admin Settings UI.

Log in to your workspace as a workspace admin.

Click your username in the top bar of the workspace and select Settings from the dropdown.

Click the Advanced tab.

On the Default catalog for the workspace row, view the catalog name.

Run the following command in a notebook or SQL Editor query that is running on a SQL warehouse or Unity Catalog-compliant cluster. The workspace default catalog is returned as long as no USE CATALOG statement or JDBC setting has been set on the session, and as long as no spark.databricks.sql.initial.catalog.namespace config is set for the cluster.

SELECT current_catalog();