Configure audit log delivery

Note

This feature requires the Premium plan.

This article describes how to configure delivery of audit logs.

Databricks provides access to audit logs of activities performed by Databricks users, allowing your enterprise to monitor detailed Databricks usage patterns. For details about logged events, see Audit log reference.

As a Databricks account owner or account admin, you can configure delivery of audit logs in JSON file format to a Google Cloud Storage (GCS) storage bucket, where you can make the data available for usage analysis. Databricks delivers a separate JSON file for each workspace in your account and a separate file for account-level events.

Set up audit log delivery

To configure audit log delivery, you must set up a GCS bucket, give Databricks access to the bucket, and then use the account console to define a log delivery configuration that tells Databricks where to deliver your logs.

You cannot edit or delete a log delivery configuration after creation, but you can temporarily or permanently disable it using the account console. You can have a maximum of two enabled audit log delivery configurations at a time.

You can use the Google Cloud Console or the Google CLI to create a Google Cloud Storage bucket in your GCP account. The following instructions assume you will use the Google Cloud Console.

Create and configure your GCS bucket

Use the Google Cloud Console to create a Google Cloud Storage bucket in your GCP account.

For region, choose multi-region.

For storage class, choose Standard for typical usage. See the Google article for storage classes.

For control access, choose Uniform.

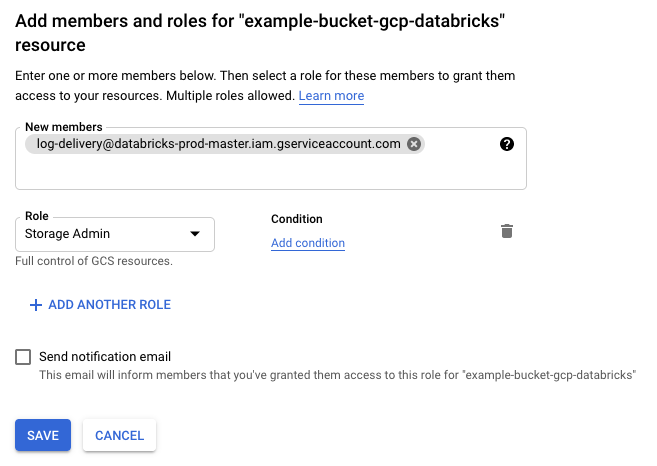

Click the Permissions tab on your new bucket.

Click ADD, and then enter the Service Account log-delivery@databricks-prod-master.iam.gserviceaccount.com as New member of the storage bucket. Grant the Service Account the Storage Admin role under Cloud Storage, without specifying an access condition.

This is required for Databricks to write and list the delivered log files for this bucket. You cannot give permission to only a bucket subdirectory. See the Google article about access control, which recommends that you create multiple buckets for granular access permissions.

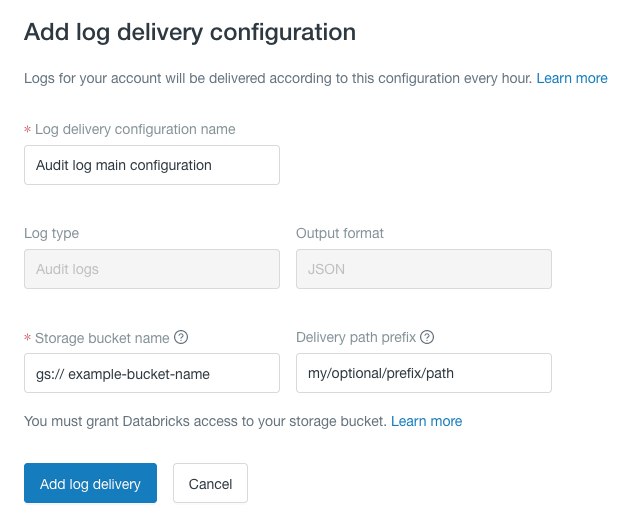
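If you prefer to script the permission grant instead of using the Google Cloud Console, the step above could be sketched roughly as follows. This is a minimal sketch, not an official Databricks procedure: it assumes the `google-cloud-storage` Python client library is installed and that your environment has credentials allowed to change the bucket's IAM policy. The function names and the bucket name in the usage note are hypothetical.

```python
# Service account named in the instructions above, in IAM member syntax.
LOG_DELIVERY_MEMBER = (
    "serviceAccount:log-delivery@databricks-prod-master.iam.gserviceaccount.com"
)
# IAM identifier of the Storage Admin role.
STORAGE_ADMIN_ROLE = "roles/storage.admin"


def build_log_delivery_binding() -> dict:
    """Build a bucket-level binding with no access condition."""
    return {"role": STORAGE_ADMIN_ROLE, "members": {LOG_DELIVERY_MEMBER}}


def grant_log_delivery_access(bucket_name: str) -> None:
    """Append the binding to the bucket's IAM policy (assumed workflow)."""
    # Imported here so build_log_delivery_binding() works without the library.
    from google.cloud import storage

    client = storage.Client()
    bucket = client.bucket(bucket_name)
    # Policy version 3 is requested so existing conditional bindings survive.
    policy = bucket.get_iam_policy(requested_policy_version=3)
    policy.bindings.append(build_log_delivery_binding())
    bucket.set_iam_policy(policy)
```

Calling `grant_log_delivery_access("my-audit-log-bucket")` with your own bucket name would apply the grant to the whole bucket, which matches the requirement that permission cannot be limited to a subdirectory.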

Create a log delivery configuration

A log delivery configuration defines the path to the GCS bucket location where you want Databricks to deliver your audit logs.

As an account admin, log in to the Databricks account console.

Click Settings.

Click Log delivery.

Click Add log delivery.

In Log delivery configuration name, add a name that is unique within your Databricks account. Spaces are allowed.

In GCS bucket name, specify your GCS bucket name.

In Delivery path prefix, optionally specify a prefix to be used in the path. See Location.

The prefix can include forward slash characters but cannot start with a slash. Otherwise, the prefix can include any valid GCS object path characters. Note that space characters are not allowed.
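The prefix rules above can be checked before you enter a value in the account console. The following is a minimal sketch with a hypothetical helper name. It covers only the two rules stated here (no leading slash, no space characters) and does not attempt to validate the full set of GCS object path characters.

```python
from typing import List


def validate_delivery_path_prefix(prefix: str) -> List[str]:
    """Return a list of rule violations; an empty list means no violation found."""
    problems = []
    # The prefix is optional, so an empty string is accepted.
    if prefix.startswith("/"):
        problems.append("prefix must not start with a slash")
    if " " in prefix:
        problems.append("prefix must not contain space characters")
    return problems


if __name__ == "__main__":
    for candidate in ["audit/prod", "/audit", "audit logs"]:
        print(repr(candidate), validate_delivery_path_prefix(candidate))
```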

Click Add log delivery.

Disable or enable a log delivery configuration

You cannot edit or delete a log delivery configuration after creation, but you can temporarily or permanently disable a log delivery configuration using the account console. You can have a maximum of two enabled audit log delivery configurations at a time.

To disable or enable a log delivery configuration:

As an account admin, log in to the Databricks account console.

Click Settings.

Click Log delivery.

For the log delivery configuration you want to disable or enable, click the kebab menu to the right of the name.

To disable it, select Disable log delivery.

To enable it, select Enable log delivery.

Latency

Audit log delivery begins within one hour after you create a log delivery configuration. You can then access the JSON files.

After audit log delivery begins, auditable events are typically logged within one hour. New JSON files potentially overwrite existing files for each workspace. Overwriting ensures exactly-once semantics without requiring read or delete access to your account.

Enabling or disabling a log delivery configuration can take up to an hour to take effect.

Location

The delivery location is:

gs://<bucket-name>/<delivery-path-prefix>/workspaceId=<workspaceId>/date=<yyyy-mm-dd>/auditlogs_<internal-id>.json

If the optional delivery path prefix is omitted, the delivery path does not include <delivery-path-prefix>/.

Account-level audit events that are not associated with any single workspace are delivered to the workspaceId=0 partition.
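When you locate or process delivered files, it can help to build and parse paths that follow the layout above. The sketch below uses hypothetical helper names and assumes that workspace IDs are numeric. The `<internal-id>` part of the file name is generated by Databricks, so the builder returns only the directory portion of the path.

```python
import re
from datetime import date
from typing import Dict, Optional

# Pattern for the documented layout; the delivery path prefix is optional.
_DELIVERY_PATH = re.compile(
    r"^gs://(?P<bucket>[^/]+)/"
    r"(?:(?P<prefix>.+)/)?"
    r"workspaceId=(?P<workspace_id>\d+)/"
    r"date=(?P<date>\d{4}-\d{2}-\d{2})/"
    r"auditlogs_(?P<internal_id>[^/]+)\.json$"
)


def delivery_dir(bucket: str, workspace_id: int, day: date, prefix: str = "") -> str:
    """Build the directory that holds one workspace's files for one day."""
    parts = [bucket]
    if prefix:
        parts.append(prefix.rstrip("/"))
    parts.append(f"workspaceId={workspace_id}")
    parts.append(f"date={day.isoformat()}")  # yyyy-mm-dd
    return "gs://" + "/".join(parts) + "/"


def parse_delivery_path(path: str) -> Optional[Dict[str, Optional[str]]]:
    """Split a delivered file path into its parts, or return None if it does not match."""
    match = _DELIVERY_PATH.match(path)
    return match.groupdict() if match else None


if __name__ == "__main__":
    # workspaceId=0 is the partition for account-level events.
    print(delivery_dir("my-bucket", 0, date(2024, 1, 15), prefix="audit/prod"))
```

In the parsed result, `prefix` is `None` when the configuration has no delivery path prefix, and a `workspace_id` of `"0"` identifies account-level events.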

For more information about analyzing audit logs using Databricks, see Audit log system table reference.