Compute features on demand using Python user-defined functions

This article describes how to create and use on-demand features in Databricks.

On-demand features are generated from feature values and model inputs. They are not materialized, and are instead computed on-demand during model training and during inference. A typical use case is to post-process existing features, for example to parse out a field from a JSON string. You can also use on-demand features to compute new features based on transformations of existing features, such as normalizing values in an array.

In Databricks, you use Python user-defined functions (UDFs) to specify how to calculate on-demand features. These functions are governed by Unity Catalog and discoverable through Catalog Explorer.

To use on-demand features, your workspace must be enabled for Unity Catalog and you must use Databricks Runtime 13.3 LTS ML or above.

Workflow

To compute features on-demand, you specify a Python user-defined function (UDF) that describes how to calculate the feature values.

During training, you provide this function and its input bindings in the

feature_lookupsparameter of thecreate_training_setAPI.You must log the trained model using the Feature Store method

log_model. This ensures that the model automatically evaluates on-demand features when it is used for inference.For batch scoring, the

score_batchAPI automatically calculates and returns all feature values, including on-demand features.

Create a Python UDF

You can create a Python UDF in a notebook or in Databricks SQL.

For example, running the following code in a notebook cell creates the Python UDF example_feature in the catalog main and schema default.

%sql

CREATE FUNCTION main.default.example_feature(x INT, y INT)

RETURNS INT

LANGUAGE PYTHON

COMMENT 'add two numbers'

AS $$

def add_numbers(n1: int, n2: int) -> int:

return n1 + n2

return add_numbers(x, y)

$$

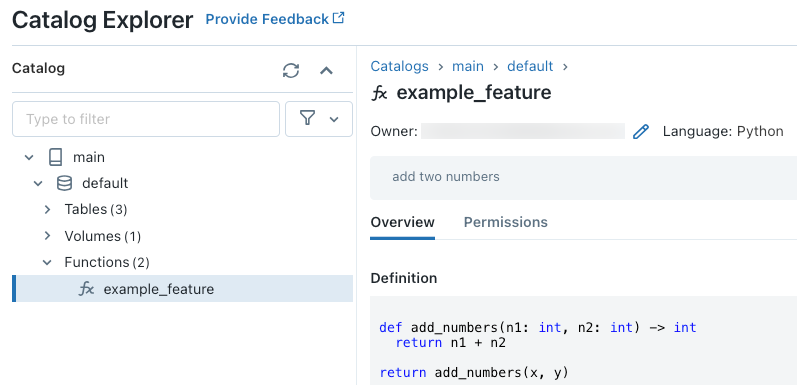

After running the code, you can navigate through the three-level namespace in Catalog Explorer to view the function definition:

For more details about creating Python UDFs, see Register a Python UDF to Unity Catalog and the SQL language manual.

Train a model using on-demand features

To train the model, you use a FeatureFunction, which is passed to the create_training_set API in the feature_lookups parameter.

The following example code uses the Python UDF main.default.example_feature that was defined in the previous section.

# Install databricks-feature-engineering first with:

# %pip install databricks-feature-engineering

# dbutils.library.restartPython()

from databricks.feature_engineering import FeatureEngineeringClient

from databricks.feature_engineering import FeatureFunction, FeatureLookup

from sklearn import linear_model

fe = FeatureEngineeringClient()

features = [

# The feature 'on_demand_feature' is computed as the sum of the the input value 'new_source_input'

# and the pre-materialized feature 'materialized_feature_value'.

# - 'new_source_input' must be included in base_df and also provided at inference time.

# - For batch inference, it must be included in the DataFrame passed to 'FeatureEngineeringClient.score_batch'.

# - For real-time inference, it must be included in the request.

# - 'materialized_feature_value' is looked up from a feature table.

FeatureFunction(

udf_name="main.default.example_feature", # UDF must be in Unity Catalog so uses a three-level namespace

input_bindings={

"x": "new_source_input",

"y": "materialized_feature_value"

},

output_name="on_demand_feature",

),

# retrieve the prematerialized feature

FeatureLookup(

table_name = 'main.default.table',

feature_names = ['materialized_feature_value'],

lookup_key = 'id'

)

]

# base_df includes the columns 'id', 'new_source_input', and 'label'

training_set = fe.create_training_set(

df=base_df,

feature_lookups=features,

label='label',

exclude_columns=['id', 'new_source_input', 'materialized_feature_value'] # drop the columns not used for training

)

# The training set contains the columns 'on_demand_feature' and 'label'.

training_df = training_set.load_df().toPandas()

# training_df columns ['materialized_feature_value', 'label']

X_train = training_df.drop(['label'], axis=1)

y_train = training_df.label

model = linear_model.LinearRegression().fit(X_train, y_train)

Log the model and register it to Unity Catalog

Models packaged with feature metadata can be registered to Unity Catalog. The feature tables used to create the model must be stored in Unity Catalog.

To ensure that the model automatically evaluates on-demand features when it is used for inference, you must set the registry URI and then log the model, as follows:

import mlflow

mlflow.set_registry_uri("databricks-uc")

fe.log_model(

model=model,

artifact_path="main.default.model",

flavor=mlflow.sklearn,

training_set=training_set,

registered_model_name="main.default.recommender_model"

)

If the Python UDF that defines the on-demand features imports any Python packages, you must specify these packages using the argument extra_pip_requirements. For example:

import mlflow

mlflow.set_registry_uri("databricks-uc")

fe.log_model(

model=model,

artifact_path="model",

flavor=mlflow.sklearn,

training_set=training_set,

registered_model_name="main.default.recommender_model",

extra_pip_requirements=["scikit-learn==1.20.3"]

)

Example notebook

The following notebook shows how to train and score a model that uses on-demand features.

Limitation

On-demand features can output all data types supported by Feature Store except MapType and ArrayType.