Diagnosing a long stage in Spark

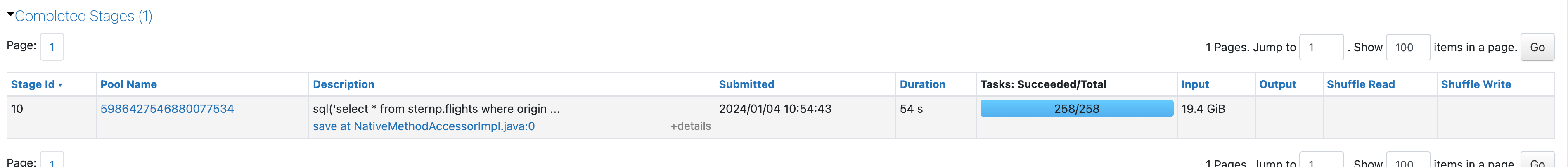

Start by identifying the longest stage of the job. Scroll to the bottom of the job’s page to the list of stages and order them by duration:
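If you have copied the stage list out of the UI, the same ordering can be reproduced in a few lines. The following is a minimal sketch, not part of Spark: the `Stage` record, its field names, and the sample rows are all hypothetical and stand in for whatever you noted from the stage list.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Stage:
    """One row of the stage list (hypothetical record, not a Spark class)."""
    stage_id: int
    description: str
    duration_s: float  # duration in seconds, as noted from the UI


def sort_by_duration(stages: List[Stage]) -> List[Stage]:
    """Return the stages ordered from longest to shortest."""
    return sorted(stages, key=lambda s: s.duration_s, reverse=True)


def longest_stage(stages: List[Stage]) -> Stage:
    """Return the single longest stage; fail loudly on an empty list."""
    if not stages:
        raise ValueError("the job has no stages")
    return max(stages, key=lambda s: s.duration_s)


# Made-up rows for illustration only.
example_stages = [
    Stage(stage_id=10, description="read source table", duration_s=42.0),
    Stage(stage_id=11, description="join and aggregate", duration_s=1260.0),
    Stage(stage_id=12, description="write results", duration_s=95.5),
]

ordered = sort_by_duration(example_stages)
slowest = longest_stage(example_stages)
```

Here `slowest` is the stage to carry through the rest of this guide.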

Stage I/O details

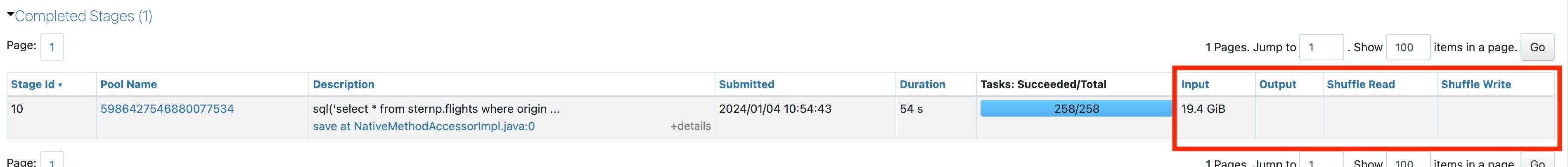

To see high-level data about what this stage was doing, look at the Input, Output, Shuffle Read, and Shuffle Write columns:

The columns mean the following:

Input: How much data this stage read from storage. This could be reading from Delta, Parquet, CSV, etc.

Output: How much data this stage wrote to storage. This could be writing to Delta, Parquet, CSV, etc.

Shuffle Read: How much shuffle data this stage read.

Shuffle Write: How much shuffle data this stage wrote.

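If you’re not familiar with shuffle, now is a good time to learn about it. In short, a shuffle is the redistribution of data between executors that Spark performs for operations such as joins and aggregations.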

Make note of these numbers as you’ll likely need them later.
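One way to keep these numbers at hand is to record them in a small structure and print a one-line summary. This is only a sketch under stated assumptions: the `StageIO` class, its field names, and the helper functions are hypothetical, and the byte counts are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StageIO:
    """The four I/O columns of one stage, in bytes (hypothetical record)."""
    input_bytes: int
    output_bytes: int
    shuffle_read_bytes: int
    shuffle_write_bytes: int


def human_bytes(n: int) -> str:
    """Format a byte count using binary units (1 KiB = 1024 B)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB"]
    size = float(n)
    for unit in units:
        # Stop at the first unit that keeps the number below 1024,
        # or at the largest unit available.
        if size < 1024 or unit == units[-1]:
            return f"{size:.1f} {unit}"
        size /= 1024
    raise AssertionError("unreachable")


def describe(io: StageIO) -> List[str]:
    """List what the stage did, skipping columns that are zero."""
    notes = []
    if io.input_bytes:
        notes.append(f"read {human_bytes(io.input_bytes)} from storage")
    if io.output_bytes:
        notes.append(f"wrote {human_bytes(io.output_bytes)} to storage")
    if io.shuffle_read_bytes:
        notes.append(f"read {human_bytes(io.shuffle_read_bytes)} of shuffle data")
    if io.shuffle_write_bytes:
        notes.append(f"wrote {human_bytes(io.shuffle_write_bytes)} of shuffle data")
    return notes


# Made-up numbers: a stage that scans storage and writes shuffle data.
scan_stage = StageIO(
    input_bytes=2 * 1024**3,
    output_bytes=0,
    shuffle_read_bytes=0,
    shuffle_write_bytes=512 * 1024**2,
)
summary = describe(scan_stage)
print("; ".join(summary))
```

A stage with Input and Shuffle Write but no Output, as in this example, is reading from storage and handing its data to a later stage.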

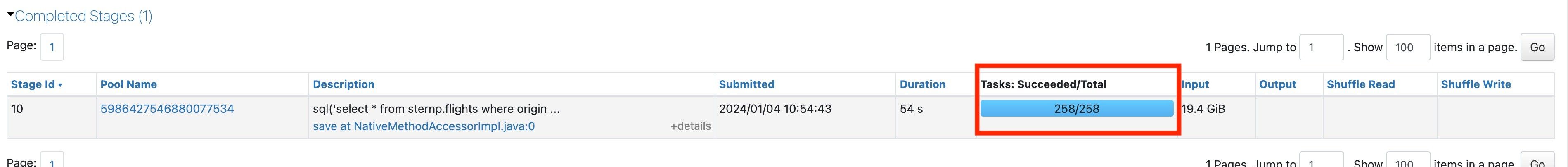

Number of tasks

The number of tasks in the long stage can point you in the direction of your issue. You can determine the number of tasks by looking here:

If the stage has only one task, that could be a sign of a problem, because a single task cannot be spread across the cluster. For more information, see One Spark task.
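The task-count check, together with the follow-up for multi-task stages described in the next section, amounts to a simple branch. The sketch below only encodes this guide's advice; the function name and the returned strings are hypothetical.

```python
def next_step(num_tasks: int) -> str:
    """Suggest where to look next, based on the long stage's task count."""
    if num_tasks < 1:
        raise ValueError("a stage must have at least one task")
    if num_tasks == 1:
        # One task means the whole stage ran without any parallelism.
        return "see One Spark task"
    # With several tasks, the per-task details on the stage's page matter.
    return "open the stage's page, then see Skew and spill"


single = next_step(1)
many = next_step(200)
```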

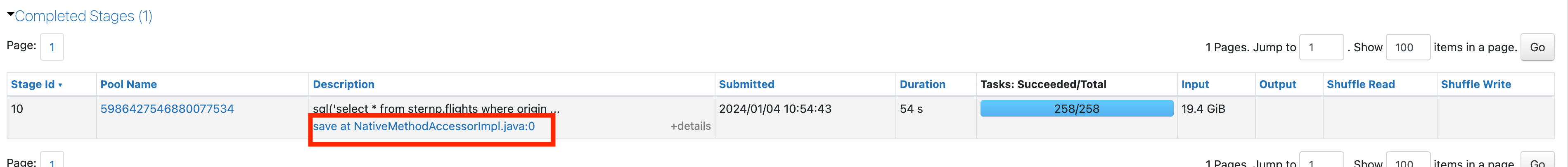

View more stage details

If the stage has more than one task, you should investigate further. Click on the link in the stage’s description to get more info about the longest stage:

Now that you’re in the stage’s page, see Skew and spill.